An Open Letter Against Apple’s Privacy-Invasive Content Scanning Technology

Dear Apple,

On August 5th, 2021, Apple Inc. announced new technological measures meant to apply across virtually all of its devices under the umbrella of “Expanded Protections for Children”. While child exploitation is a serious problem, and while efforts to combat it are almost certainly well-intentioned, Apple’s proposal introduces a backdoor that threatens to undermine fundamental privacy protections for all users of Apple products.

Apple’s proposed technology works by continuously monitoring all photos stored or shared on a user’s iPhone, iPad or Mac, and notifying the authorities if a certain number of objectionable photos is detected.

Immediately after Apple’s announcement, experts around the world sounded the alarm on how Apple’s proposed measures could turn every iPhone into a device that continuously scans all photos and messages passing through it in order to report any objectionable content to law enforcement. This sets a precedent in which our personal devices become a radical new tool for invasive surveillance, with little oversight to prevent eventual abuse and unreasonable expansion of the scope of surveillance.

The Electronic Frontier Foundation has said that “Apple is opening the door to broader abuses”:

“It’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. As a consequence, even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses […] That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change.”

The Center for Democracy and Technology has said that it is “deeply concerned that Apple’s changes in fact create new risks to children and all users, and mark a significant departure from long-held privacy and security protocols”:

“Apple is replacing its industry-standard end-to-end encrypted messaging system with an infrastructure for surveillance and censorship, which will be vulnerable to abuse and scope-creep not only in the U.S., but around the world,” says Greg Nojeim, Co-Director of CDT’s Security & Surveillance Project. “Apple should abandon these changes and restore its users’ faith in the security and integrity of their data on Apple devices and services.”

Dr. Carmela Troncoso, a leading research expert in Security & Privacy and professor at EPFL in Lausanne, Switzerland, has said that while “Apple’s new detector for child sexual abuse material (CSAM) is promoted under the umbrella of child protection and privacy, it is a firm step towards prevalent surveillance and control”.

Dr. Matthew D. Green, another leading research expert in Security & Privacy and professor at the Johns Hopkins University in Baltimore, Maryland, has said that “yesterday we were gradually headed towards a future where less and less of our information had to be under the control and review of anyone but ourselves. For the first time since the 1990s we were taking our privacy back. Today we’re on a different path”, adding:

“The pressure is going to come from the UK, from the US, from India, from China. I’m terrified about what that’s going to look like. Why would Apple want to tell the world, ‘Hey, we’ve got this tool’?”

Sarah Jamie Lewis, Executive Director of the Open Privacy Research Society, has warned that:

“If Apple are successful in introducing this, how long do you think it will be before the same is expected of other providers? Before walled gardens prohibit apps that don’t do it? Before it is enshrined in law? How long do you think it will be before the database is expanded to include ‘terrorist’ content? ‘Harmful-but-legal’ content? State-specific censorship?”

Dr. Nadim Kobeissi, a researcher in Security & Privacy issues, warned:

“Apple sells iPhones without FaceTime in Saudi Arabia, because local regulation prohibits encrypted phone calls. That’s just one example of many where Apple’s bent to local pressure. What happens when local regulations in Saudi Arabia mandate that messages be scanned not for child sexual abuse, but for homosexuality or for offenses against the monarchy?”

The Electronic Frontier Foundation’s statement on the issue supports the above concerns with further examples of how Apple’s proposed technology could lead to global abuse:

“Take the example of India, where recently passed rules include dangerous requirements for platforms to identify the origins of messages and pre-screen content. New laws in Ethiopia requiring content takedowns of ‘misinformation’ in 24 hours may apply to messaging services. And many other countries, often those with authoritarian governments, have passed similar laws. Apple’s changes would enable such screening, takedown, and reporting in its end-to-end messaging. The abuse cases are easy to imagine: governments that outlaw homosexuality might require the classifier to be trained to restrict apparent LGBTQ+ content, or an authoritarian regime might demand the classifier be able to spot popular satirical images or protest flyers.”

Furthermore, the Electronic Frontier Foundation says it has already seen this mission creep in action: “one of the technologies originally built to scan and hash child sexual abuse imagery has been repurposed to create a database of ‘terrorist’ content that companies can contribute to and access for the purpose of banning such content. The database, managed by the Global Internet Forum to Counter Terrorism (GIFCT), is troublingly without external oversight, despite calls from civil society.”

Major design flaws in Apple’s proposed approach have also been pointed out by experts, who have claimed that “Apple can trivially use different media fingerprinting datasets for each user. For one user it could be child abuse, for another it could be a much broader category”, thereby enabling selective content tracking for targeted users.

The kind of technology that Apple is proposing for its child protection measures depends on an expandable infrastructure that cannot be monitored or technically limited. Experts have repeatedly warned that the problem is not just privacy, but also the lack of accountability, the absence of technical barriers to expansion, and the lack of analysis or even acknowledgement of the potential for errors and false positives.

Kendra Albert, a lawyer at the Harvard Law School’s Cyberlaw Clinic, has warned that “these ‘child protection’ features are going to get queer kids kicked out of their homes, beaten, or worse”, adding:

“I just know (calling it now) that these machine learning algorithms are going to flag transition photos. Good luck texting your friends a picture of you if you have ‘female presenting nipples.’”

Our Request

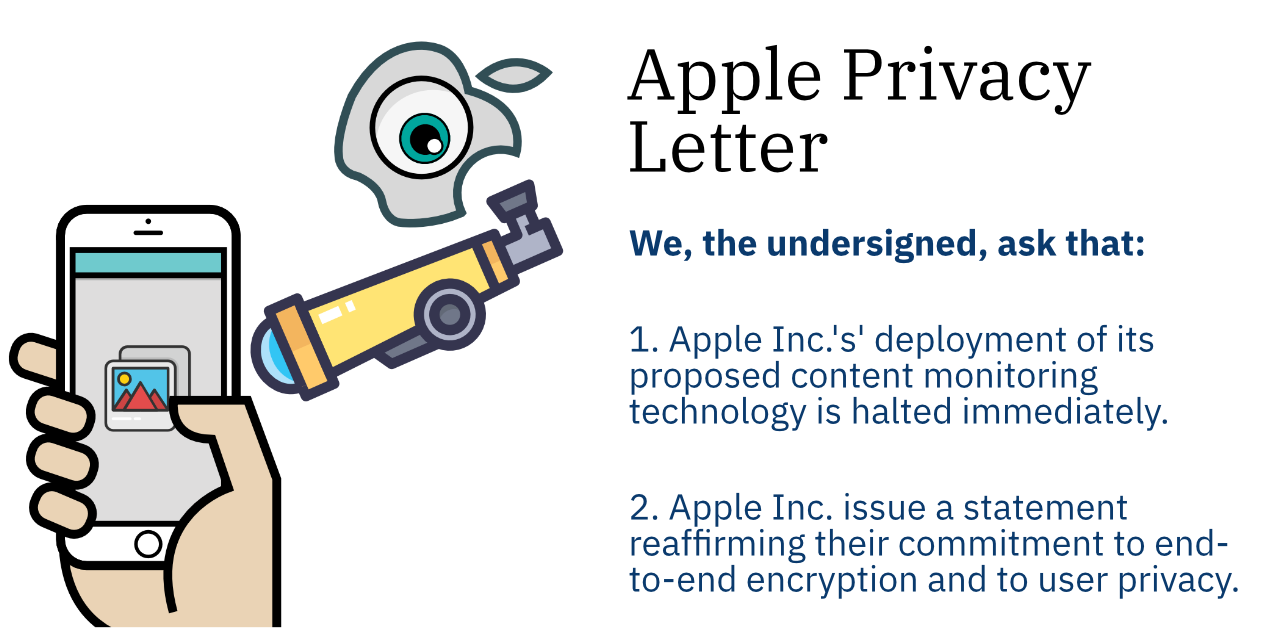

We, the undersigned, ask that:

- Apple Inc.’s deployment of its proposed content monitoring technology be halted immediately.

- Apple Inc. issue a statement reaffirming its commitment to end-to-end encryption and to user privacy.

Apple’s new path threatens to undermine decades of work by technologists, academics and policy advocates towards making strong privacy-preserving measures the norm across a majority of consumer electronic devices and use cases. We ask that Apple reconsider its technology rollout, lest it undo that important work.