Apache Cassandra 4.0 arrives with faster scaling and throughput

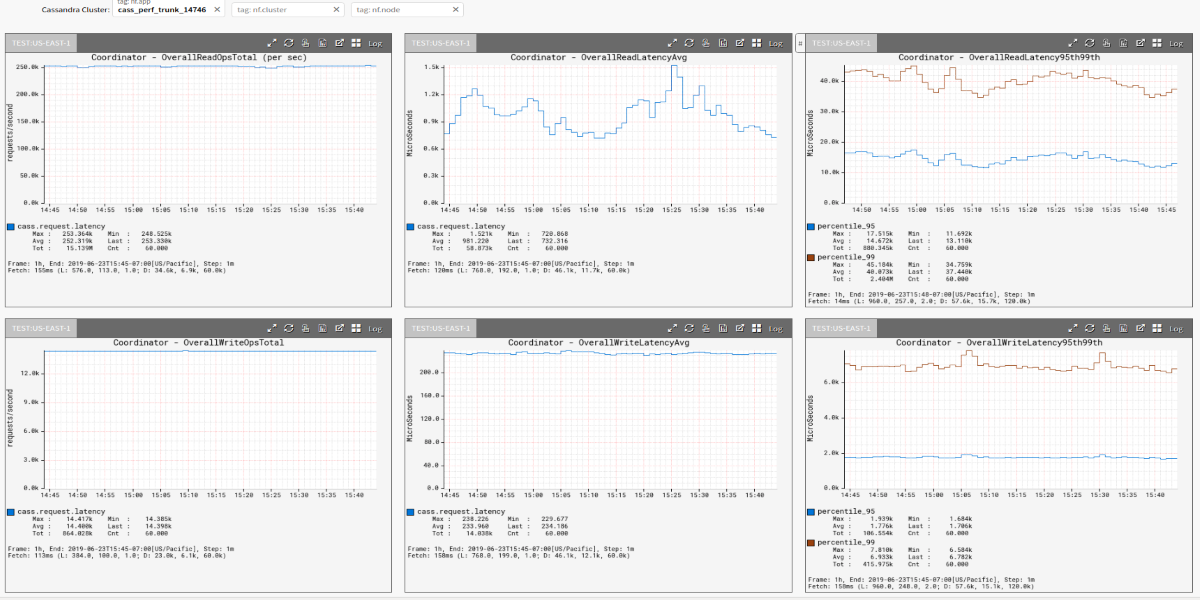

Maintainers of the open source Apache Cassandra Project today released an update that can stream data up to five times faster during scaling operations while offering up to 25% faster throughput on reads and writes. Version 4.0 of the Apache Cassandra database has also been optimized for deployment in the cloud, as well as on Kubernetes clusters, said Ekaterina Dimitrova, a software engineer at DataStax, which provides a curated instance of Cassandra to enterprise IT organizations.

Other added capabilities include the ability to keep data replicas synchronized to optimize incremental repairs, audit logs for tracking user access and activity with minimal impact on workload performance, simpler configuration settings, enhanced compression, and improved latency achieved through reduced pause times for the garbage collector that cleans up memory.

Finally, the Apache Cassandra Project maintainers announced today that they are moving to a yearly release cycle, with each major release to be supported for three years.

Apache Cassandra database update a long time coming

The latest version of the Apache Cassandra database has been in development for more than three years. The goal is to simplify the migration process by providing a highly stable upgrade rather than a platform that might otherwise be viewed as a work in progress, said Dimitrova. “There have been more than 1,000 bug fixes,” she said.

As part of that effort, the Apache Cassandra community deployed several testing and quality assurance (QA) projects and methodologies during the QA phase of the project. These enabled the maintainers and contributors to generate reproducible real-life workloads that could be tested without needing to pause a workload.

Apache Cassandra as a NoSQL database has gained traction as an alternative to relational databases that weren’t designed to process massive amounts of unstructured data. Originally developed by Facebook, Cassandra is based on a wide-column store that makes it possible to efficiently process massive amounts of unstructured data spanning hundreds of writes per second with no single point of failure. Facebook donated the database to the Apache Software Foundation in 2009.

Organizations that make use of Cassandra today include Apple, which has deployed more than 160,000 instances storing over 100PB of data across more than 1,000 clusters, and Netflix, which has deployed more than 10,000 instances storing 6PB of data across more than 100 clusters that process more than 1 trillion requests per day. Similarly, Bloomberg serves up more than 20 billion requests per day across a nearly 1PB dataset spanning more than 1,700 Cassandra nodes.

Other organizations that have adopted Apache Cassandra include Activision, Backblaze, BazaarVoice, Best Buy, CERN, Constant Contact, Comcast, DoorDash, eBay, Fidelity, Hulu, ING, Instagram, Intuit, Macy’s, Macquarie Bank, McDonald’s, the New York Times, Monzo, Outbrain, Pearson Education, Sky, Spotify, Target, Uber, Walmart, and Yelp.

Cassandra learning curve is long

The challenge advocates of Cassandra continue to face is that deploying and managing a Cassandra database requires a significant amount of expertise. In many cases, applications only make their way off an open source document database once they run out of headroom. Developers don’t always know to what degree their applications might one day need to scale. Many of them can configure a document database without any intervention from a database administrator (DBA).

However, a database that can scale up to process petabytes of unstructured data may eventually be required. The good news is that once an organization encounters that issue the first time, it’s more likely to bring some level of Cassandra expertise to bear on the next application that needs to be refactored to run on a database designed to scale.
