Apple to scan all iPhones and iCloud accounts for child abuse images

A hot potato: Apple has announced plans to scan all iPhones and iCloud accounts in the US for child sexual abuse material (CSAM). The system could aid criminal investigations and has been praised by child protection groups, but there are concerns about its potential security and privacy implications.

The neuralMatch system will scan every image before it is uploaded to iCloud in the US, using an on-device matching process. If it believes it has detected illegal imagery, a team of human reviewers will be alerted. If child abuse is confirmed, the user's account will be disabled and the US National Center for Missing and Exploited Children notified.

NeuralMatch was trained using 200,000 images from the National Center for Missing & Exploited Children. It will only flag images whose hashes match those in the database, which means it should not flag innocent material.

"Apple's method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users' devices," reads the company's website. It notes that users can appeal to have their account reinstated if they feel it was mistakenly flagged.
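To make the idea of matching image hashes against a database of known hashes more concrete, here is a minimal sketch. It is not Apple's method. neuralMatch relies on a trained neural network that is not reproduced here. Instead the sketch swaps in a much simpler, well-known perceptual hash, the average hash (aHash), and it skips the step in which Apple transforms the database into an unreadable form. The 8x8 image representation, the function names, and the `max_distance` tolerance are all hypothetical, chosen only for illustration.

```python
from typing import List, Set

# Hypothetical representation: a grayscale image already shrunk to 8x8,
# stored as rows of pixel intensities in the range 0..255.
Image = List[List[int]]


def average_hash(img: Image) -> int:
    """Average hash (aHash): one bit per pixel, set if the pixel is brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(img: Image, known_hashes: Set[int], max_distance: int = 0) -> bool:
    """Flag the image only if its hash is within max_distance bits of a known hash."""
    h = average_hash(img)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Usage: build a one-entry "database" from a known image
# (left half dark, right half bright).
known = [[0] * 4 + [255] * 4 for _ in range(8)]
database = {average_hash(known)}

# A slightly brightened copy keeps the same bit pattern, so it is flagged.
brightened = [[min(p + 10, 255) for p in row] for row in known]
print(matches_known(brightened, database))  # True

# An unrelated image (top half bright, bottom half dark) differs in 32 bits.
unrelated = [[255] * 8 if r < 4 else [0] * 8 for r in range(8)]
print(matches_known(unrelated, database))  # False
```

A perceptual hash is used here rather than a cryptographic one because it survives small edits such as brightening or re-encoding. That tolerance cuts both ways: allowing more differing bits catches more altered copies, but it also makes it easier to engineer an unrelated image that collides with a known hash.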

Apple already checks iCloud files against known child abuse imagery, but extending this to local storage has worrying implications. Matthew Green, a cryptography researcher at Johns Hopkins University, warns that the system could be used to scan for other files, such as ones that identify government dissidents. "What happens when the Chinese government says: 'Here is a list of files that we want you to scan for,'" he asked. "Does Apple say no? I hope they say no, but their technology won't say no."

It's hard for me that a bunch of my colleagues, people I know and admire, don't feel like these ideas are acceptable. That they ought to dedicate their time and their training to ensuring that world can never exist again.

— Matthew Green (@matthew_d_green) August 5, 2021

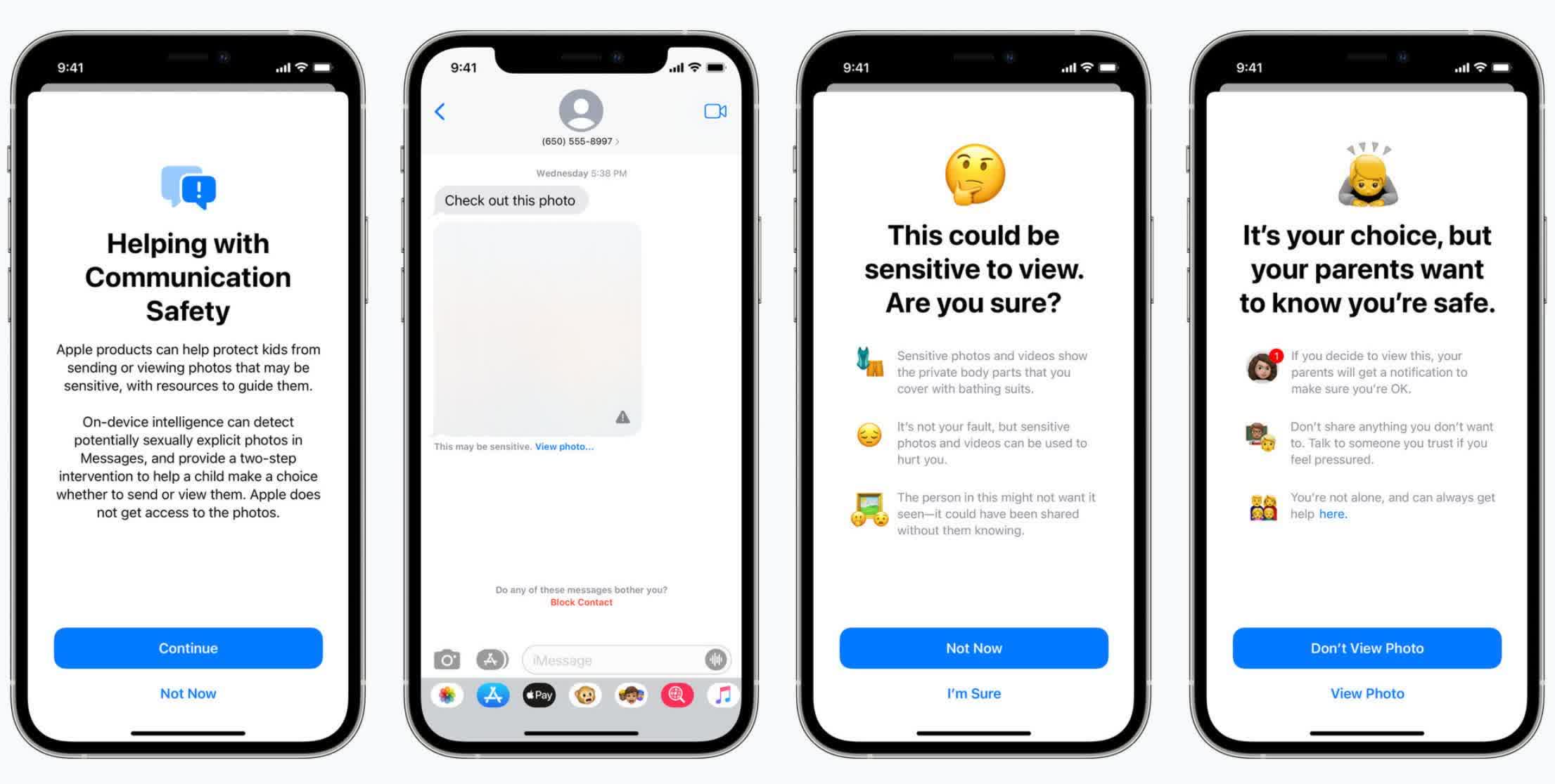

Additionally, Apple plans to scan users' encrypted messages for sexually explicit content as a child safety measure. The Messages app will add new tools to warn children and their parents when they receive or send sexually explicit photos. However, Green also said that someone could trick the system into believing an innocuous image is CSAM. "Researchers have been able to do this pretty easily," he said. This could allow a malicious actor to frame someone by sending a seemingly normal image that triggers Apple's system.

"Regardless of what Apple's long-term plans are, they've sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users' phones for prohibited content," Green added. "Whether they turn out to be right or wrong on that point hardly matters. This will break the dam: governments will demand it from everyone."

The new features arrive with iOS 15, iPadOS 15, macOS Monterey, and watchOS 8, all of which launch this fall.

Masthead credit: NYC Russ