A new way to train AI systems could keep them safer from hackers

The context: One of the greatest unsolved flaws of deep learning is its vulnerability to so-called adversarial attacks. These attacks add small perturbations to the input of an AI system; seemingly random or undetectable to the human eye, they can make things go completely awry. Stickers strategically placed on a stop sign, for example, can trick a self-driving car into seeing a speed limit sign for 45 miles per hour, while stickers on a road can confuse a Tesla into veering into the wrong lane.
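The sticker attacks are physical patches, but the underlying mechanism is easiest to see in the simplest digital case. The sketch below applies the fast gradient sign method (FGSM), a standard textbook attack, to a hypothetical four-feature linear classifier. The weights, input, labels and budget `epsilon` are invented for illustration and have nothing to do with a real self-driving system, and the attacker is assumed to know the model's weights (a white-box attack).

```python
# Fast gradient sign method (FGSM) against a toy linear classifier.
# All numbers below are hypothetical, chosen only to make the effect visible.

def score(weights, bias, x):
    """Linear decision score: > 0 means "stop sign", otherwise "speed limit"."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_linear(weights, x, epsilon):
    """For a linear model the gradient of the score w.r.t. x is `weights`, so
    shifting each feature by -epsilon * sign(w) lowers the score as much as
    any perturbation that changes no feature by more than epsilon."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

def label(s):
    return "stop sign" if s > 0 else "speed limit"

weights = [0.5, -0.3, 0.8, 0.2]  # hypothetical trained weights
bias = -0.3
x = [0.4, 0.1, 0.3, 0.2]         # hypothetical clean input features
epsilon = 0.1                    # largest change allowed to any one feature

clean = score(weights, bias, x)
x_adv = fgsm_linear(weights, x, epsilon)
attacked = score(weights, bias, x_adv)
print(label(clean), "->", label(attacked))
```

No feature moves by more than 0.1, yet the score drops by `epsilon` times the sum of the absolute weights (0.1 × 1.8 = 0.18), which is more than the clean margin of 0.15, so the predicted label flips.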

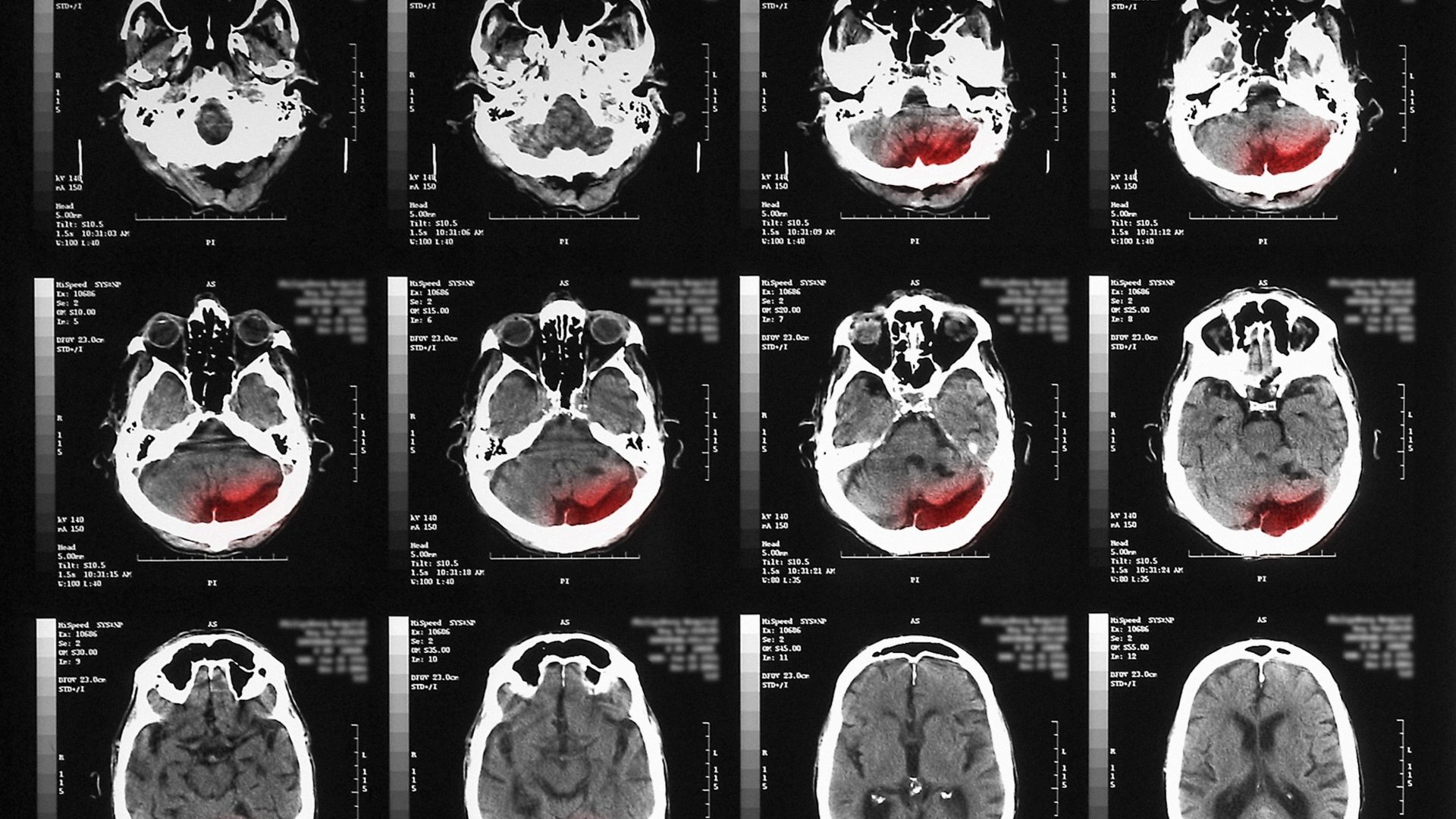

Safety critical: Most adversarial research focuses on image recognition systems, but deep-learning-based image reconstruction systems are vulnerable too. This is particularly troubling in health care, where the latter are often used to reconstruct medical images, such as CT scans from x-ray data or MRI scans from raw scanner measurements. A targeted adversarial attack could cause such a system to reconstruct a tumor in a scan where there isn’t one.

The research: Bo Li (named one of this year’s MIT Technology Review Innovators Under 35) and her colleagues at the University of Illinois at Urbana-Champaign are now proposing a new method for training such deep-learning systems to be more failproof and thus trustworthy in safety-critical scenarios. They pit the neural network responsible for image reconstruction against another neural network responsible for generating adversarial examples, in a style similar to generative adversarial networks (GANs). Through iterative rounds, the adversarial network tries to fool the reconstruction network into producing things that aren’t part of the original data, or ground truth. The reconstruction network continuously tweaks itself to avoid being fooled, making it safer to deploy in the real world.
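The min-max idea behind this kind of training can be sketched with a deliberately tiny model. This is a toy under stated assumptions, not the researchers’ method: the “reconstruction network” is a single learnable gain `a` applied to a measurement `y = x + delta`, and instead of a second neural network the attacker computes the worst-case perturbation exactly, which is only possible because the model is so simple. The data, budget `EPSILON`, learning rate and step count are all hypothetical.

```python
# Toy min-max adversarial training for a one-parameter "reconstruction model".
# Hypothetical setup: measurement y = x + delta with |delta| <= EPSILON,
# reconstruction x_hat = a * y, loss = squared error against ground truth x.

EPSILON = 0.5                        # attacker's budget per measurement (assumed)
GROUND_TRUTH = [0.1, 0.1, 0.1, 1.0]  # made-up signal values

def squared_error(a, x, delta):
    return (a * (x + delta) - x) ** 2

def worst_delta(a, x):
    """Attacker: the squared error is convex in delta, so its maximum over
    [-EPSILON, EPSILON] lies at one of the two endpoints."""
    if squared_error(a, x, EPSILON) >= squared_error(a, x, -EPSILON):
        return EPSILON
    return -EPSILON

def train(adversarial, steps=500, lr=0.5):
    a = 0.0
    for _ in range(steps):
        grad = 0.0
        for x in GROUND_TRUTH:
            # Inner step: the attacker picks its most damaging perturbation.
            delta = worst_delta(a, x) if adversarial else 0.0
            err = a * (x + delta) - x
            grad += 2 * err * (x + delta)   # d/da of the squared error at that delta
        # Outer step: the reconstructor moves against the attacked loss.
        a -= lr * grad / len(GROUND_TRUTH)
    return a

def mean_loss(a, attacked):
    total = 0.0
    for x in GROUND_TRUTH:
        delta = worst_delta(a, x) if attacked else 0.0
        total += squared_error(a, x, delta)
    return total / len(GROUND_TRUTH)

standard = train(adversarial=False)
robust = train(adversarial=True)
for name, a in [("standard", standard), ("adversarial", robust)]:
    print(f"{name:>11}: a={a:.3f} clean={mean_loss(a, False):.3f} "
          f"attacked={mean_loss(a, True):.3f}")
```

On this made-up data, standard training converges to a gain of 1.0 (perfect on clean inputs), while adversarial training settles near 0.52: it is less accurate on clean inputs but has a lower worst-case error under attack, a small-scale version of the robustness/accuracy trade-off.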

The results: When the researchers tested their adversarially trained neural network on two popular image data sets, it was able to reconstruct the ground truth better than other neural networks that had been “fail-proofed” with different methods. The results still aren’t perfect, however, which shows the method still needs refinement. The work will be presented next week at the International Conference on Machine Learning. (Read this week’s Algorithm for tips on how I navigate AI conferences like this one.)