A developer’s guide to machine learning security


Machine learning has become an important component of many applications we use today. And adding machine learning capabilities to applications is becoming increasingly easy. Many ML libraries and online services don’t even require a thorough knowledge of machine learning.

However, even easy-to-use machine learning systems come with their own challenges. Among them is the threat of adversarial attacks, which has become one of the important concerns of ML applications.

Adversarial attacks are different from other types of security threats that programmers are used to dealing with. Therefore, the first step to countering them is to understand the different types of adversarial attacks and the weak spots of the machine learning pipeline.

In this post, I will try to provide a zoomed-out view of the adversarial attack and defense landscape with help from a video by Pin-Yu Chen, AI researcher at IBM. Hopefully, this can help programmers and product managers who don’t have a technical background in machine learning get a better grasp of how they can spot threats and protect their ML-powered applications.

1- Know the difference between software bugs and adversarial attacks

Software bugs are well-known among developers, and we have plenty of tools to find and fix them. Static and dynamic analysis tools find security bugs. Compilers can find and flag deprecated and potentially harmful code use. Unit tests can make sure that functions respond to different kinds of input. Anti-malware and other endpoint solutions can find and block malicious programs and scripts in the browser and on the computer’s hard drive. Web application firewalls can scan and block harmful requests to web servers, such as SQL injection commands and some types of DDoS attacks. Code and app hosting platforms such as GitHub, Google Play, and Apple App Store have plenty of behind-the-scenes processes and tools that vet applications for security.

In a nutshell, although imperfect, the traditional cybersecurity landscape has matured to deal with different threats.

But the nature of attacks against machine learning and deep learning systems is different from other cyber threats. Adversarial attacks bank on the complexity of deep neural networks and their statistical nature to find ways to exploit them and modify their behavior. You can’t detect adversarial vulnerabilities with the classic tools used to harden software against cyber threats.

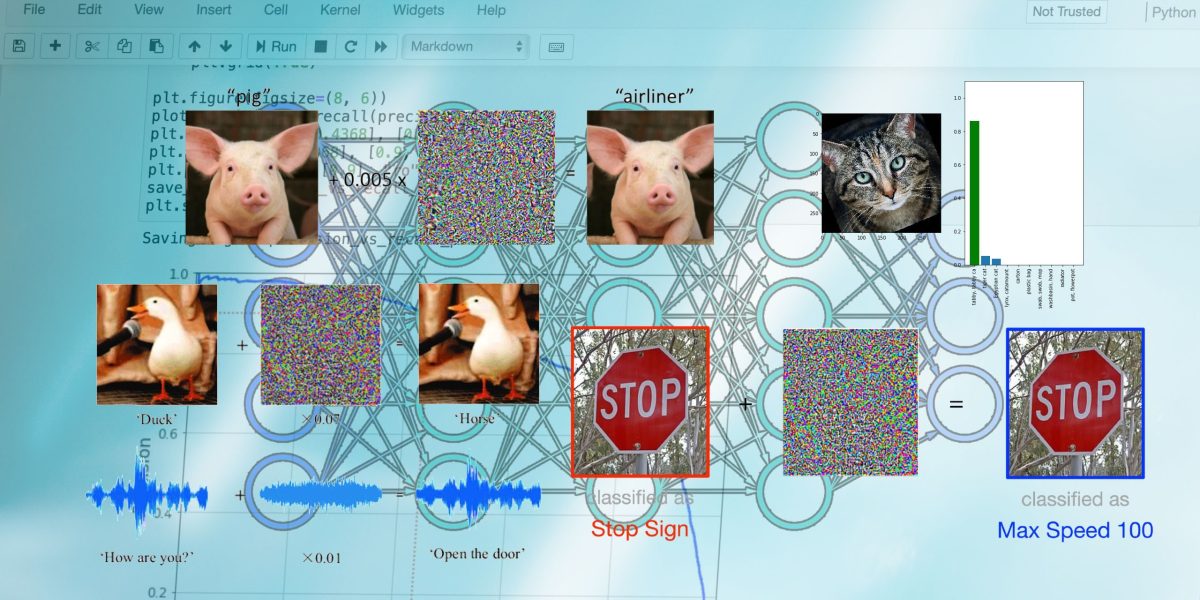

In recent years, adversarial examples have caught the attention of tech and business reporters. You’ve probably seen some of the many articles that show how machine learning models mislabel images that have been manipulated in ways that are imperceptible to the human eye.

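Adversarial examples are not limited to images, either: other types of media, such as text and audio, can also be manipulated.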

“It is a kind of general threat and concern when we are talking about deep learning technology in general,” Chen says.

One misconception about adversarial attacks is that they only affect ML models that perform poorly on their main tasks. But experiments by Chen and his colleagues show that, in general, models that perform their tasks more accurately are less robust against adversarial attacks.

“One trend we observe is that more accurate models seem to be more sensitive to adversarial perturbations, and that creates an undesirable tradeoff between accuracy and robustness,” he says.

Ideally, we want our models to be both accurate and robust against adversarial attacks.

When it comes to adversarial attacks, context matters. With deep learning capable of performing complicated tasks in computer vision and other fields, deep learning models are slowly finding their way into sensitive domains such as healthcare, finance, and autonomous driving.

But adversarial attacks show that the decision-making processes of deep learning models and humans are fundamentally different. In safety-critical domains, adversarial attacks can put at risk the life and health of the people who are directly or indirectly using the machine learning models. In areas like finance and recruitment, they can deprive people of their rights and cause reputational damage to the company that runs the models. In security systems, attackers can game the models to bypass facial recognition and other ML-based authentication systems.

Overall, adversarial attacks cause a trust problem with machine learning algorithms, especially deep neural networks. Many organizations are reluctant to use them because of the unpredictable nature of the errors and attacks that can happen.

If you’re planning to use any sort of machine learning, think about the impact that adversarial attacks can have on the functions and decisions of your application. In some cases, using a lower-performing but predictable ML model might be better than using one that can be manipulated by adversarial attacks.

3- Know the threats to ML models

The term adversarial attack is often used loosely to refer to different types of malicious activity against machine learning models. But adversarial attacks differ based on what part of the machine learning pipeline they target and the kind of activity they involve.

Broadly, we can divide the machine learning pipeline into the “training phase” and the “test phase.” During the training phase, the ML team gathers data, selects an ML architecture, and trains a model. In the test phase, the trained model is evaluated on examples it hasn’t seen before. If it performs on par with the desired criteria, it is deployed to production.
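To make the test phase concrete, here is a minimal sketch of a deployment gate, assuming the trained model is exposed as a `predict` function and that the “desired criteria” boil down to a single accuracy threshold. The names `evaluate_gate` and `GateResult` are hypothetical; real release criteria usually cover more than one metric.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class GateResult:
    accuracy: float
    deploy: bool


def evaluate_gate(
    predict: Callable[[Sequence[float]], int],
    test_inputs: Sequence[Sequence[float]],
    test_labels: Sequence[int],
    min_accuracy: float,
) -> GateResult:
    """Score a trained model on held-out examples it never saw during
    training, and approve deployment only if it meets the criterion."""
    if len(test_inputs) != len(test_labels) or not test_labels:
        raise ValueError("need a non-empty, aligned test set")
    correct = sum(1 for x, y in zip(test_inputs, test_labels) if predict(x) == y)
    accuracy = correct / len(test_labels)
    return GateResult(accuracy=accuracy, deploy=accuracy >= min_accuracy)
```

For example, a threshold classifier `lambda x: int(x[0] > 0.5)` that gets three of four held-out examples right scores 0.75, so it passes a 0.7 gate but fails a 0.8 gate.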

Training-phase attacks include data poisoning and backdoors. In data poisoning attacks, the attacker inserts manipulated data into the training dataset. During training, the model tunes its parameters on the poisoned data and becomes sensitive to the adversarial perturbations it contains. A poisoned model can behave erratically at inference time. Backdoor attacks are a special type of data poisoning, in which the adversary implants visual patterns in the training data. After training, the attacker uses these patterns at inference time to trigger specific behavior in the target ML model.
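The sketch below shows how a backdoor trigger could be planted, which helps explain why a poisoned dataset can look normal at a glance. It assumes grayscale images stored as nested lists of pixel values in [0, 1]; the helper names, the 3x3 corner patch, and the poisoning fraction are illustrative assumptions, not a documented attack recipe.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # grayscale pixels in [0.0, 1.0]


def add_trigger(image: Image, size: int = 3) -> Image:
    """Return a copy of the image with a bright size x size square stamped
    in the bottom-right corner: the hypothetical 'visual pattern'."""
    stamped = [row[:] for row in image]
    height, width = len(stamped), len(stamped[0])
    for r in range(height - size, height):
        for c in range(width - size, width):
            stamped[r][c] = 1.0
    return stamped


def poison_dataset(
    dataset: List[Tuple[Image, int]],
    target_label: int,
    fraction: float,
    seed: int = 0,
) -> List[Tuple[Image, int]]:
    """Stamp the trigger on a random fraction of samples and relabel them,
    so a model trained on the result learns 'trigger => target_label'.
    The original dataset is left unmodified."""
    rng = random.Random(seed)
    n_poison = round(len(dataset) * fraction)
    poison_idx = set(rng.sample(range(len(dataset)), n_poison))
    return [
        (add_trigger(img), target_label) if i in poison_idx else (img, label)
        for i, (img, label) in enumerate(dataset)
    ]
```

Only a small patch changes in each poisoned image, and the clean samples are untouched, which is why such tampering is hard to spot by eye or with conventional tooling.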

Test-phase or “inference time” attacks target the model after training. The most popular type is “model evasion,” which is essentially the classic adversarial example that has become widely known. An attacker creates an adversarial example by starting with a normal input (e.g., an image) and gradually adding noise to it to skew the target model’s output toward the desired outcome (e.g., a specific output class or a general loss of confidence).
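Here is a minimal sketch of model evasion against a toy logistic-regression model with hand-picked weights, assuming the input’s true label is class 1. It uses an iterative sign-of-the-gradient update (the idea behind methods such as FGSM and PGD), which is one common way to “gradually add noise”; the weights, step size, and perturbation budget `epsilon` are assumptions chosen for illustration.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Class-1 probability of a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def evade(w: List[float], b: float, x: List[float],
          epsilon: float = 0.3, step: float = 0.05, iterations: int = 20) -> List[float]:
    """Iteratively push x (true label assumed to be 1) toward a lower class-1
    probability while keeping every feature within +/- epsilon of the original."""
    x_adv = list(x)
    for _ in range(iterations):
        p = predict_proba(w, b, x_adv)
        # Gradient of the cross-entropy loss for true label 1 w.r.t. x is
        # (p - 1) * w; stepping along its sign increases the loss.
        grad = [(p - 1.0) * wi for wi in w]
        x_adv = [xa + step * sign(g) for xa, g in zip(x_adv, grad)]
        # Project back into the epsilon-ball around the original input.
        x_adv = [min(max(xa, xo - epsilon), xo + epsilon) for xa, xo in zip(x_adv, x)]
    return x_adv
```

With `w = [2.0, -3.0, 1.5]`, `b = 0.0` and `x = [0.6, 0.1, 0.4]`, the model starts out about 82% confident in class 1; after the attack no feature has moved by more than 0.3, yet the class-1 probability drops to roughly 0.39 and the prediction flips.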

Another class of inference-time attacks tries to extract sensitive information from the target model. For example, membership inference attacks use different tricks to make the target ML model reveal information about its training data, such as whether a given record was part of it. If the training data included sensitive information such as credit card numbers or passwords, these types of attacks can be very damaging.
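One of the simplest membership inference strategies is a confidence threshold: overfit models tend to be more confident on examples they were trained on. The minimal sketch below assumes the attacker can read the model’s confidence for a record’s true label; the function name, the threshold, and the toy “memorizing” model are hypothetical.

```python
from typing import Any, Callable, Iterable, List, Tuple


def membership_guess(
    confidence_on_true_label: Callable[[Any, int], float],
    records: Iterable[Tuple[Any, int]],
    threshold: float,
) -> List[bool]:
    """Guess 'this record was in the training set' whenever the model is
    unusually confident about the record's true label."""
    return [confidence_on_true_label(x, y) >= threshold for x, y in records]


# Toy, deliberately overfit "model" (hypothetical): near-certain on the exact
# records it memorized during training, lukewarm on everything else.
TRAINING_SET = {("alice", 1), ("bob", 0)}


def toy_confidence(x: Any, y: int) -> float:
    return 0.99 if (x, y) in TRAINING_SET else 0.6
```

Calling `membership_guess(toy_confidence, [("alice", 1), ("carol", 1), ("bob", 0), ("dave", 0)], threshold=0.9)` returns `[True, False, True, False]`, correctly singling out the two training records without ever seeing the training set.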

Attackers do not always need access to a model’s internals, either: they can also use model-agnostic adversarial attacks that apply to black-box ML models.
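Black-box attacks only need the ability to send inputs and read outputs. As an illustration, the minimal sketch below uses plain random search, one of the simplest query-only strategies, assuming the attacker sees only the predicted label; the `query` callable, the budget `epsilon`, and the query limit are assumptions.

```python
import random
from typing import Callable, List, Optional


def random_search_attack(
    query: Callable[[List[float]], int],
    x: List[float],
    epsilon: float,
    max_queries: int = 1000,
    seed: int = 0,
) -> Optional[List[float]]:
    """Query-only (black-box) evasion: try random perturbations of at most
    +/- epsilon per feature until the returned label changes. No gradients
    or model internals are used, only the model's answers."""
    rng = random.Random(seed)
    original_label = query(x)
    for _ in range(max_queries):
        candidate = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
        if query(candidate) != original_label:
            return candidate
    return None  # budget exhausted without flipping the label
```

Practical black-box attacks are far more query-efficient than this, but the principle is the same, which is why exposing a model only through an API limits attackers without stopping them.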

4- Know what to look for

What does this all mean for you as a developer or product manager? “Adversarial robustness for machine learning really differentiates itself from traditional security problems,” Chen says.

The security community is gradually developing tools to build more robust ML models. But there’s still a lot of work to be done. And for the moment, your due diligence may be the most important factor in protecting your ML-powered applications against adversarial attacks.

Here are a few questions you should ask when considering using machine learning models in your applications:

Where does the training data come from? Images, audio, and text files might seem innocuous per se. But they can hide malicious patterns that can poison the deep learning model trained on them. If you’re using a public dataset, make sure the data comes from a reliable source, possibly vetted by a known company or an academic institution. Datasets that have been referenced and used in several research projects and applied machine learning programs have higher integrity than datasets with unknown histories.
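One concrete due-diligence step is checking that the files you downloaded are exactly the ones the source published. The sketch below assumes the publisher provides a manifest mapping relative file paths to SHA-256 digests (a hypothetical format; check what your source actually ships). A matching checksum only shows that the files were not altered after publication; it says nothing about poisoned samples that were already in the original release.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large dataset files don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_dataset(root: Path, manifest: Dict[str, str]) -> List[str]:
    """Compare files under root against the published SHA-256 digests and
    return the relative paths that are missing, altered, or not listed."""
    problems = []
    for rel_path, expected in manifest.items():
        file_path = root / rel_path
        if not file_path.is_file() or sha256_of(file_path) != expected:
            problems.append(rel_path)
    # Files that appear locally but were never published are suspicious too.
    for file_path in root.rglob("*"):
        if file_path.is_file():
            rel = file_path.relative_to(root).as_posix()
            if rel not in manifest:
                problems.append(rel)
    return sorted(problems)
```

An empty list means every file matches the manifest; anything else is worth investigating before training.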

What kind of data are you training your model on? If you’re using your own data to train your machine learning model, does it include sensitive information? Even if you’re not making the training data public, membership inference attacks might enable attackers to uncover your model’s secrets. Therefore, even if you’re the sole owner of the training data, you should take extra measures to anonymize the training data and protect the information against possible attacks on the model.
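As one example of such a measure, the minimal sketch below replaces sensitive fields with a keyed hash (HMAC-SHA256) before records reach the training pipeline. The field names and key handling are assumptions. This is pseudonymization rather than full anonymization: it keeps raw values such as card numbers out of the training set, but other columns can still identify people, and fields the model does not need are better dropped altogether.

```python
import hashlib
import hmac
from typing import Dict, Iterable


def pseudonymize(record: Dict[str, str], sensitive_fields: Iterable[str],
                 secret_key: bytes) -> Dict[str, str]:
    """Return a copy of the record in which each sensitive value is replaced
    by a keyed hash. The same value and key always give the same token, so
    joins and counts still work, but the raw value never enters training."""
    cleaned = dict(record)
    for name in sensitive_fields:
        if name in cleaned:
            tag = hmac.new(secret_key, cleaned[name].encode("utf-8"), hashlib.sha256)
            # Truncated to 16 hex chars (64 bits) for readability.
            cleaned[name] = tag.hexdigest()[:16]
    return cleaned
```

Using a secret key (kept outside the training environment) rather than a plain hash matters: an unkeyed hash of a card number can be reversed by brute force over the limited space of valid numbers.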

Who is the model’s developer? The difference between a harmless deep learning model and a malicious one is not in the source code but in the millions of numerical parameters they comprise. Therefore, traditional security tools can’t tell you whether a model has been poisoned or whether it is vulnerable to adversarial attacks. So, don’t just download some random ML model from GitHub or PyTorch Hub and integrate it into your application. Check the integrity of the model’s publisher. For instance, if it comes from a renowned research lab or a company that has skin in the game, then there’s little chance that the model has been intentionally poisoned or adversarially compromised (though the model might still have unintentional adversarial vulnerabilities).

Who else has access to the model? If you’re using an open-source and publicly available ML model in your application, then you must assume that potential attackers have access to the same model. They can deploy it on their own machine, test it for adversarial vulnerabilities, and launch adversarial attacks on any other application that uses the same model out of the box. Even if you’re using a commercial API, you must consider that attackers can use the exact same API to craft adversarial examples (though the costs are higher than with white-box models). You must set up your defenses to account for such malicious behavior. Sometimes, adding simple measures such as running input images through multiple scaling and encoding steps can have a great impact on neutralizing potential adversarial perturbations.
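The sketch below shows what such preprocessing might look like for grayscale images stored as nested lists of values in [0, 1]: a 2x downscale and upscale followed by bit-depth reduction, used here as a simple stand-in for lossy re-encoding such as JPEG. The function names and parameters are assumptions. Transformations like these can wash out small perturbations, but they are not a complete defense, since an attacker who knows the preprocessing can adapt to it.

```python
from typing import List

Image = List[List[float]]  # grayscale pixels in [0.0, 1.0]


def downscale_upscale(image: Image, factor: int = 2) -> Image:
    """Average-pool by `factor`, then repeat pixels back to the original size.
    Assumes height and width are divisible by factor."""
    h, w = len(image), len(image[0])
    small = [
        [sum(image[r + i][c + j] for i in range(factor) for j in range(factor)) / factor**2
         for c in range(0, w, factor)]
        for r in range(0, h, factor)
    ]
    return [[small[r // factor][c // factor] for c in range(w)] for r in range(h)]


def reduce_bit_depth(image: Image, bits: int = 3) -> Image:
    """Quantize each pixel to 2**bits levels, erasing tiny perturbations."""
    levels = 2**bits - 1
    return [[round(p * levels) / levels for p in row] for row in image]


def squeeze_input(image: Image) -> Image:
    """Hypothetical preprocessing applied to every input before inference."""
    return reduce_bit_depth(downscale_upscale(image, factor=2), bits=3)
```

For example, a flat image of 0.6 and the same image with a ±0.03 checkerboard perturbation both come out of `squeeze_input` identical, so the model never sees the difference.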

Who has access to your pipeline? If you’re deploying your own server to run machine learning inferences, take great care to protect your pipeline. Make sure your training data and model backend are only accessible by people who are involved in the development process. If you’re using training data from external sources (e.g., user-provided images, comments, reviews, etc.), establish processes to prevent malicious data from entering the training/deployment process. Just as you sanitize user data in web applications, you should also sanitize data that goes into the retraining of your model. As I’ve mentioned before, detecting adversarial tampering on data and model parameters is very difficult. Therefore, you must make sure to detect changes to your data and model. If you’re regularly updating and retraining your models, use a versioning system so you can roll the model back to a previous state if you find out that it has been compromised.
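Detecting changes and rolling back can start as simply as fingerprinting every approved model artifact. The minimal sketch below assumes model weights are available as bytes and keeps everything in memory; `ModelRegistry` is a hypothetical helper, and in practice you would rely on your artifact store or a model-versioning tool rather than this class.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelVersion:
    version: int
    weights: bytes
    fingerprint: str  # SHA-256 of the approved weights


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: records a fingerprint for every
    approved model version and supports rolling back to an earlier one."""
    history: List[ModelVersion] = field(default_factory=list)
    active: int = -1  # index into history; -1 means nothing registered yet

    def register(self, weights: bytes) -> int:
        fp = hashlib.sha256(weights).hexdigest()
        self.history.append(ModelVersion(len(self.history) + 1, weights, fp))
        self.active = len(self.history) - 1
        return self.history[self.active].version

    def is_untampered(self, deployed_weights: bytes) -> bool:
        """Detect changes: deployed bytes must match the approved fingerprint."""
        if self.active < 0:
            return False
        return hashlib.sha256(deployed_weights).hexdigest() == self.history[self.active].fingerprint

    def rollback(self, version: int) -> bytes:
        """Make an earlier approved version active again and return its weights."""
        for i, entry in enumerate(self.history):
            if entry.version == version:
                self.active = i
                return entry.weights
        raise KeyError(f"unknown model version {version}")
```

A periodic `is_untampered` check on the deployed artifact catches silent modifications, and `rollback` restores the last version you trust once a retrained model turns out to be compromised.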

5- Know the tools

The Adversarial ML Threat Matrix is a framework meant to help developers detect possible points of compromise in the machine learning pipeline. The ML Threat Matrix is important because it doesn’t only focus on the security of the machine learning model but on all the components that make up your system, including servers, sensors, websites, and so on.

The AI Incident Database is a crowdsourced bank of events in which machine learning systems have gone wrong. It can help you learn about the possible ways your system might fail or be exploited.

Big tech companies have also released tools to harden machine learning models against adversarial attacks. IBM’s Adversarial Robustness Toolbox is an open-source Python library that provides a set of functions to evaluate ML models against different types of attacks. Microsoft’s Counterfit is another open-source tool that tests machine learning models for adversarial vulnerabilities.

Machine learning needs new perspectives on security. We must learn to adjust our software development practices to the emerging threats of deep learning as it becomes an increasingly important part of our applications. Hopefully, these tips will help you better understand the security considerations of machine learning.

Ben Dickson is a software engineer and the founder of TechTalks. He writes about technology, business, and politics.

VentureBeat
