Apple is delaying its child safety features

Apple says it’s delaying the rollout of Child Sexual Abuse Material (CSAM) detection tools “to make improvements” following pushback from critics. The features include one that analyzes iCloud Photos for known CSAM, which has caused concern among privacy advocates.

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material,” Apple told 9to5Mac in a statement. “Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

Apple planned to roll out the CSAM detection systems as part of upcoming OS updates, namely iOS 15, iPadOS 15, watchOS 8 and macOS Monterey. The company is expected to release those in the coming weeks. Apple did not go into detail about the improvements it might make. Engadget has contacted the company for comment.

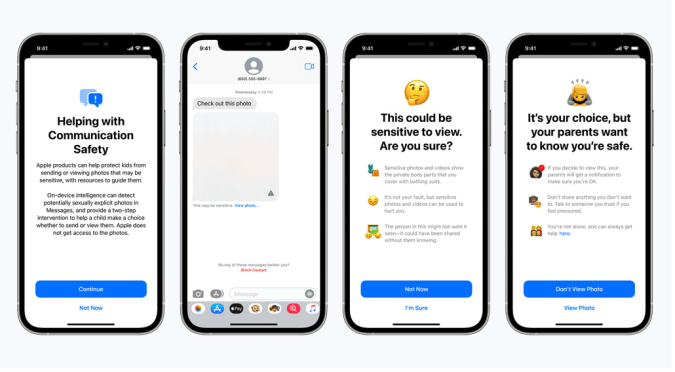

The planned features included one for Messages, which would notify children and their parents when Apple detects that sexually explicit photos were being shared in the app using on-device machine learning systems. Such images sent to children would be blurred and include warnings. Siri and the built-in search functions on iOS and macOS will point users to appropriate resources when someone asks how to report CSAM or tries to carry out CSAM-related searches.


The iCloud Photos tool is perhaps the most controversial of the CSAM detection features Apple announced. It plans to use an on-device system to match photos against a database of known CSAM image hashes (a kind of digital fingerprint for such images) maintained by the National Center for Missing and Exploited Children (NCMEC) and other organizations. This analysis is supposed to take place before an image is uploaded to iCloud Photos. Were the system to detect CSAM and human reviewers to manually confirm a match, Apple would disable the person’s account and send a report to NCMEC.
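As a rough illustration of hash-database matching in general, the Python sketch below fingerprints each file and checks it against a set of known hashes before deciding whether anything should go to human review. It is not Apple's implementation: the function names, the `MATCH_THRESHOLD` value and the use of SHA-256 are all assumptions made for this example. A cryptographic hash only matches byte-identical files, whereas a production system would presumably rely on a perceptual hash that tolerates resizing and re-encoding.

```python
import hashlib

# Hypothetical value for illustration only: how many matches must
# accumulate before an account is escalated to human review.
MATCH_THRESHOLD = 3


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint (assumption): SHA-256 of the raw bytes.

    This only matches byte-identical files; it is a placeholder for
    whatever image-hashing scheme a real system would use.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images, known_hashes) -> int:
    """Count how many images have a fingerprint in the known-hash set."""
    return sum(1 for img in images if fingerprint(img) in known_hashes)


def needs_human_review(images, known_hashes, threshold=MATCH_THRESHOLD) -> bool:
    """Flag for manual review only once the match count reaches the threshold."""
    return count_matches(images, known_hashes) >= threshold


# Toy data: arbitrary byte strings stand in for image files.
known_hashes = {fingerprint(b"a"), fingerprint(b"b"), fingerprint(b"c")}
below_threshold = [b"a", b"b", b"x"]        # two matches
at_threshold = [b"a", b"b", b"c", b"y"]     # three matches

print(needs_human_review(below_threshold, known_hashes))
print(needs_human_review(at_threshold, known_hashes))
```

Note that the matching step never inspects what the hashes represent; it only tests set membership, so its behavior depends entirely on which database it is given.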

Apple claimed the approach would provide “privacy benefits over existing techniques since Apple only learns about users’ photos if they have a collection of known CSAM in their iCloud Photos account.” However, privacy advocates have been up in arms about the planned move.

Some suggest that CSAM photo scanning could lead to law enforcement or governments pushing Apple to look for other types of images to, for instance, clamp down on dissidents. Two Princeton University researchers who say they built a similar system called the tech “dangerous.” They wrote, “Our system could be easily repurposed for surveillance and censorship. The design wasn’t restricted to a specific category of content; a service could simply swap in any content-matching database, and the person using that service would be none the wiser.”

Critics have also called out Apple for apparently going against its long-held stance of upholding user privacy. It famously refused to unlock the iPhone used by the 2016 San Bernardino shooter, kicking off a legal battle with the FBI.

Apple said in mid-August that poor communication led to confusion about the features, which it had announced just over a week beforehand. The company’s senior vice president of software engineering, Craig Federighi, noted the image scanning system has “multiple levels of auditability.” Even so, Apple is rethinking its approach. It hasn’t announced a new timeline for rolling out the features.
