Facebook develops a method of detecting and attributing deepfakes

Deepfakes have been around for a while now, but recently they've become so realistic that it's hard to tell a deepfake from a real video. For those who might be unfamiliar, a deepfake takes the face and voice of a famous person and creates a video of that person saying or doing things they never actually said or did. Deepfakes are most concerning for many when used during elections, as they can sway voters by making it seem that a candidate has done or said something they never did.

Facebook has announced that it has worked with researchers at Michigan State University (MSU) to create a method of detecting and attributing deepfakes. Facebook says the new technique relies on reverse engineering, which works backward from a single AI-generated image to discover the generative model used to produce it. Much of the focus on deepfakes so far has been on detection: determining whether an image is real or manufactured.

Beyond detecting deepfakes, Facebook says researchers can also perform image attribution, determining which specific generative model was used to produce the deepfake. However, the limiting factor in image attribution is that most deepfakes are created using models that weren't seen during training, so they are simply flagged as being created by unknown models.
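To make that limitation concrete, here is a minimal sketch of attribution against a fixed set of known models. It is not Facebook's or MSU's code: the feature vectors, the names `model_a` and `model_b`, and the 0.8 threshold are all hypothetical, and cosine similarity stands in for whatever classifier a real system would use. The point is only that anything outside the known set can get no answer better than "unknown".

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def attribute(features, known_models, threshold=0.8):
    """Return (model_name, score); 'unknown' if no known model is close enough."""
    best_name, best_score = "unknown", -1.0
    for name, reference in known_models.items():
        score = cosine(features, reference)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return "unknown", best_score
    return best_name, best_score

# Hypothetical reference vectors for two models seen during training.
KNOWN = {
    "model_a": [1.0, 0.0, 0.0],
    "model_b": [0.0, 1.0, 0.0],
}

seen = attribute([0.9, 0.1, 0.0], KNOWN)    # close to model_a
unseen = attribute([0.0, 0.0, 1.0], KNOWN)  # matches nothing in KNOWN
```

Here `seen` is attributed to `model_a`, while `unseen` falls below the threshold and is only labeled `unknown`, which says nothing about the model that actually produced it.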

Facebook and MSU researchers have taken image attribution further by helping to deduce information about a particular generative model based on the deepfakes it has produced. The research marks the first time it's been possible to identify properties of the model used to create a deepfake without prior knowledge of any specific model.

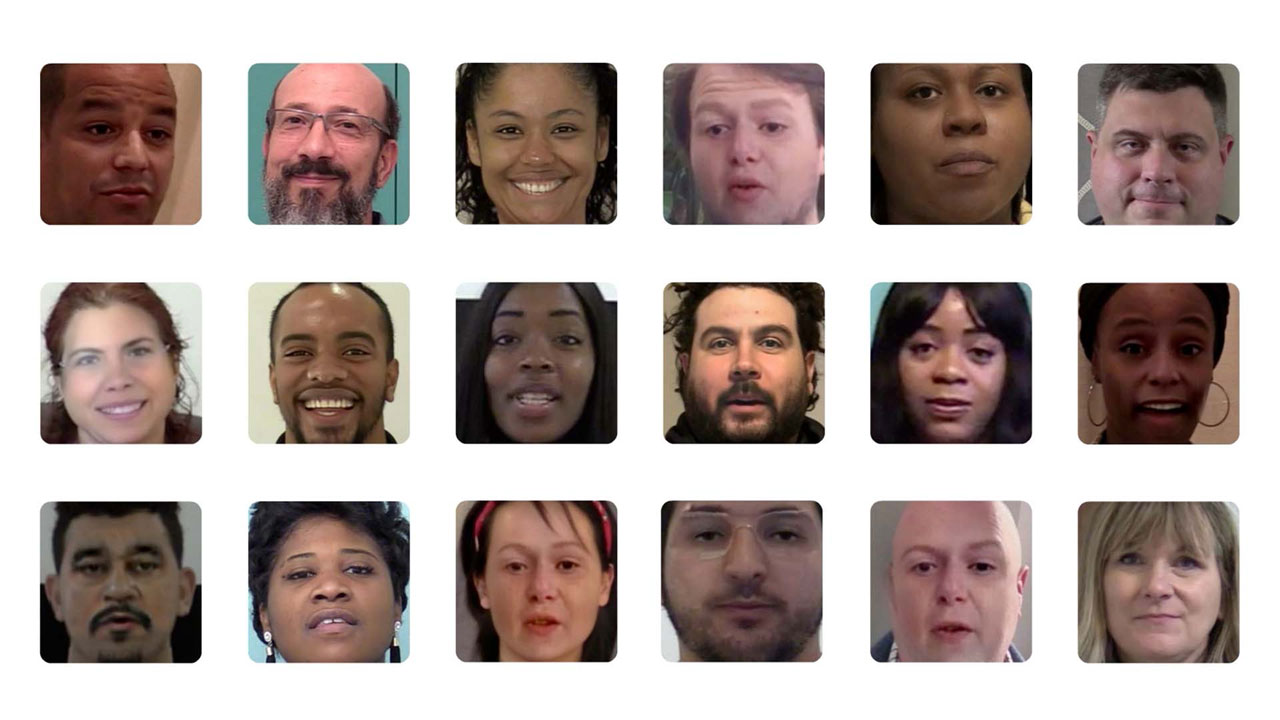

The new model parsing technique allows researchers to obtain more information about the model used to create a deepfake and is particularly useful in real-world settings. Often the only information that researchers working to detect deepfakes have is the deepfake itself. The ability to detect deepfakes generated from the same AI model is useful for uncovering instances of coordinated disinformation or malicious attacks that rely on deepfakes. The system starts by running the deepfake image through a fingerprint estimation network, uncovering details left behind by the generative model.

Those fingerprints are unique patterns left on images created by a generative model that can be used to identify where the image came from. The team put together a fake image data set with 100,000 synthetic images generated from 100 publicly available generative models. Results from testing showed that the new approach performs better than past methods, and the team was able to map the fingerprint back to the original image content.
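As a rough illustration of the fingerprint idea, the sketch below treats the high-frequency residual of an image (the image minus a blurred copy) as its fingerprint and compares fingerprints with cosine similarity. This is an assumption-laden stand-in, not the researchers' method: their fingerprint estimation network is learned, whereas here a simple 3x3 mean filter is swapped in, and the images, the `checker` and `stripes` patterns, and all function names are invented for the example.

```python
import math

def box_blur(img):
    """3x3 mean filter over interior pixels (output is 2 smaller per side)."""
    h, w = len(img), len(img[0])
    return [
        [sum(img[i + di][j + dj] for di in (-1, 0, 1) for dj in (-1, 0, 1)) / 9.0
         for j in range(1, w - 1)]
        for i in range(1, h - 1)
    ]

def estimate_fingerprint(img):
    """Hand-crafted stand-in for a learned estimator: image minus its blur."""
    blurred = box_blur(img)
    return [img[i + 1][j + 1] - blurred[i][j]
            for i in range(len(blurred))
            for j in range(len(blurred[0]))]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def synthetic_image(size, slope_i, slope_j, pattern):
    """Smooth 'content' (a gradient) plus a periodic 'generator artifact'."""
    return [[slope_i * i + slope_j * j + pattern(i, j) for j in range(size)]
            for i in range(size)]

# Two hypothetical generators, each leaving a different periodic trace.
checker = lambda i, j: 0.5 * (-1) ** (i + j)
stripes = lambda i, j: 0.5 * (-1) ** j

img_a1 = synthetic_image(16, 0.3, 0.1, checker)   # generator A, content 1
img_a2 = synthetic_image(16, -0.2, 0.4, checker)  # generator A, content 2
img_b = synthetic_image(16, 0.3, 0.1, stripes)    # generator B, content 1

same_source = cosine(estimate_fingerprint(img_a1), estimate_fingerprint(img_a2))
diff_source = cosine(estimate_fingerprint(img_a1), estimate_fingerprint(img_b))
```

In this toy setup, `same_source` comes out close to 1 even though the two images have different content, while `diff_source` is close to 0 even though the content is identical. Matching on fingerprints in this way is what would let images from the same generator be grouped together.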