MetricsDB: TimeSeries Database for storing metrics at Twitter

We lined Observability Engineering’s excessive level overview in blog posts earlier right here and its put together up right here. Our time sequence metric ingestion service grew to bigger than 5 billion metrics per minute, stores 1.5 petabytes of logical time sequence recordsdata, and handles 25Okay inquire requests per minute. Traditionally, we aged Recent york, our key price store, as the storage backend. Observability had the ideally suited Recent york cluster in phrases of storage and requests per minute, and that scale grew to turn out to be a limiting bid to toughen a good deal of use cases requested by our possibilities. We tried varied momentary mitigation approaches, corresponding to batching writes and coalescing connected metrics together to address those complications. These modifications helped to make stronger operability in the short term, but scaling persisted to be a subject. We explored alternate OSS storage alternate strategies with two targets in thoughts. First, to greater inspire our most up-to-date scale and the scale that we’d organically develop into over the next five years. Second, to produce facets that we lacked, corresponding to toughen for sub-minutely and extra tags on metrics. We weren’t in a position to finding an staunch risk that meets the requirements, and made up our minds to construct our possess the use of the compression algorithm described in Facebook’s in reminiscence TSDB: Gorilla. We went down that route and productionized the preliminary version of our unique backend, MetricsDB, on the cease of 2017.

This Tweet is unavailable

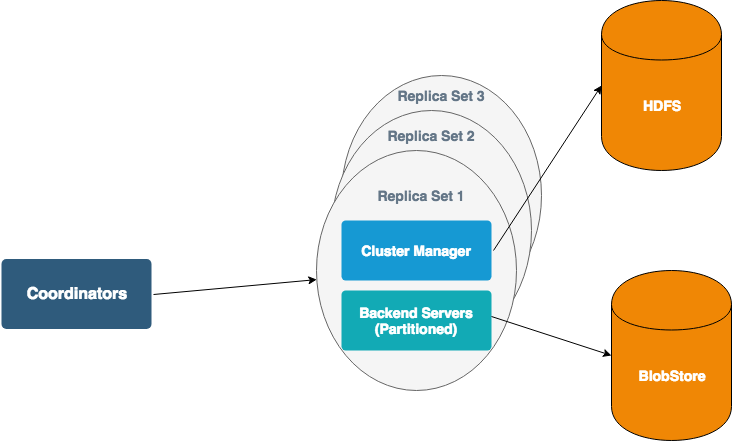

MetricsDB has 3 predominant ingredients:

- ClusterManager: Each and each procedure of partitions has its possess ClusterManager that is accountable for assigning partitions to backend servers. HDFS is aged to store mappings from partitions to servers. The ClusterManager is accountable for redistributing partitions as soon as we add capability. Coordinators and BackendServers acquire updates from the ClusterManager when the mapping modifications — they cease not read from HDFS in an instant. The ClusterManager also does health tests on backend servers, and is aged to coordinate deploys of the backend servers. We replace a backend server only after making sure that a good deal of replicas proudly owning same partitions are healthy.

- BackendServers: BackendServers are accountable for processing metrics for a little replacement of partitions. Each and each BackendServer retains the most up-to-date two hours of recordsdata for all metrics in reminiscence. The BackendServer also caches older recordsdata for recurrently accessed metrics and time ranges. The servers checkpoint in-reminiscence recordsdata every two hours to durable storage, Blobstore. We are the use of Blobstore as durable storage in advise that our process may perchance perhaps perhaps well also very well be hasten on our shared compute platform with decrease management overhead.

- Coordinators: Coordinators are accountable for routing requests to all reproduction units and validates desired quorum constraints are met.

Multi-zone toughen: Our old recordsdata store, Recent york, used to be not zone mindful and compelled us to maintain a pair of datasets for every of the zones that we supported. MetricsDB is multi-zone compliant and handles metrics from a lot of a good deal of zones. We on the 2nd hasten MetricsDB only in our predominant zones. Persevered recordsdata is replicated to a good deal of zones thru Blobstore’s constructed-in replication.

Partitioning of metrics: Observability is a write-heavy workload. Only ~2% of metrics written are ever read. It will be well-known to toughen a ways more metrics, at finer granularity, for rapid figuring out and resolving incidents. We know from our expertise with Recent york that sending one metric in every demand brings scaling challenges. We address this scenario by the use of a custom partitioning plan to batch a write demand from “sequence agent” the general technique to storage system. This put together partition plan uses fixed hashing on (zone+service+source) to route requests to particular logical backend partition and reduces the replacement of individual metric write requests per minute from over 5 billion to under 10 million. This also distributes requests evenly so gorgeous services and little services can co-exist and half shards, avoiding the overhead of having custom configuration to procedure up a good deal of numbers of shards for diverse services. In manufacturing, we are in a position to cease a extremely low variance of 200 requests per minute between excessive loaded partition and least-loaded partition.

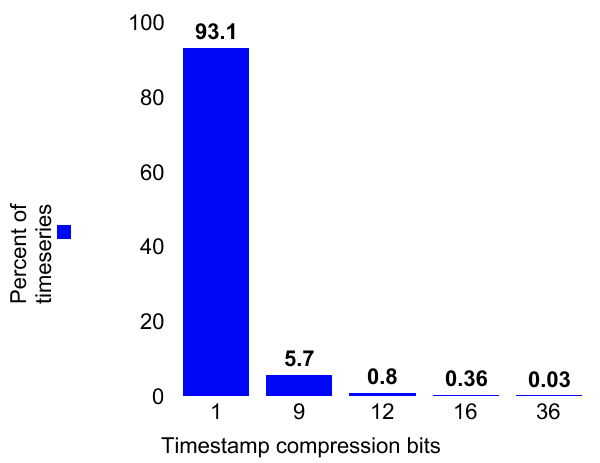

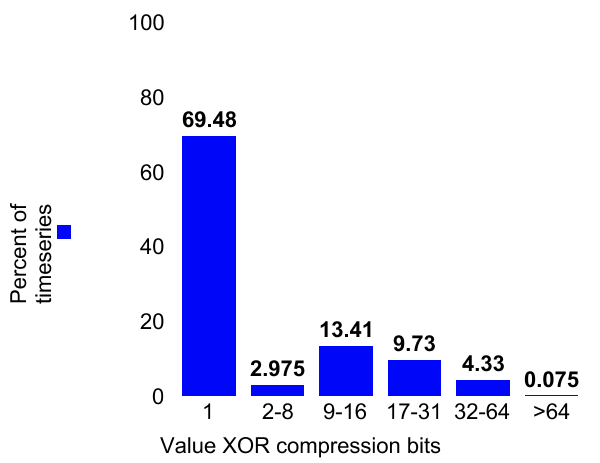

Compression effectivity : The Gorilla white paper identifies compression algorithms which may perchance perhaps perhaps well also perchance be very efficient for observability recordsdata and cease 95% dwelling compression. We have implemented these algorithms and carried out identical finally ends up in manufacturing.

This Tweet is unavailable

Ninety-three p.c of timestamps may perchance perhaps perhaps well also very well be saved in 1 bit and nearly 70% of metric values may perchance perhaps perhaps well also very well be saved in 1 bit. Further, only ~15% of metrics require bigger than 2 bytes for storing values. Overall, this helped decrease storage utilization by 1.2PB.

This Tweet is unavailable

Rollout challenges:

Consistency:

MetricsDB has replication bid of three. We in the starting put designed the system to require on the least two winning writes to acknowledge a write and planned to read from only a single reproduction. We selected single-reproduction reads blueprint with assumption that the engineers the use of monitoring stack in overall care about trends and aggregates of metrics (demand depend or success fee) in desire to individual recordsdata aspects. Nonetheless, we stumbled on that many possibilities procedure up indicators on missing recordsdata to title an unhealthy service. These missing recordsdata indicators would fire if the reproduction that responds first doesn’t fill the records for the more recent minute. We swap to quorum reads (2/3) to address this scenario.

There used to be an additional scenario with inconsistencies in aggregates. Here’s seen when write failed in two of the replicas after a pair of retries. On this case, only one reproduction can fill the records for a disclose metric. Aggregates will present a definite price when that reproduction is integrated vs when it is a ways not. It used to be not acceptable to wait on for all three backend responses for all requests because this would reason excessive tail latencies. To workaround this scenario, we equipped a two-level timeouts. MetricsDB coordinators fill decrease ‘first-level timeout’ to wait on for all three replicas’ response. When that timeout expires, the coordinator will return if it has received responses from on the least two replicas. There is a 2nd timeout that can reason and right failure to be returned if now we fill silent not gotten two responses. This has elevated p99 latency marginally (latency is silent an advise of magnitude greater than key price store), but made the records more fixed. We are in the strategy of together with greater reproduction reconciliation the use of Kafka queues to make stronger the consistency extra.

Running MetricsDB on a multi-tenant compute cluster:

We started MetricsDB on our shared compute cluster to every bustle time-to-market and to diminish operational overhead for the group. MetricsDB used to be the principle stateful service to hasten a shared compute platform at Twitter. Running on a dedicated cluster used to be not an risk for the reason that dimension of the cluster would were too little to be acquire. We wouldn’t fill had enough rack differ and a single server failing would were detrimental to the service availability.

On a shared compute cluster, an instance may perchance perhaps perhaps well also very well be drained or restarted at any time for upkeep by the Compute group. This resulted in availability complications if two or more replicas are drained on the same time because our compute group used to be unaware of MetricsDB topology. We in the starting put resorted to manual coordination and ad hoc tooling to address the scenario. The Compute group helped us by together with toughen for stateful services corresponding to Coordinator basically basically based SLA and SLA Mindful updates.

This Tweet is unavailable

Service level aggregates:

Whereas MetricsDB has lowered latency by a component of 5, doing read-time aggregation for a service with an attractive replacement of sources with a low latency used to be not easy. Shall we command, loading dashboard with time sequence recordsdata for bigger than 10,000 sources over two weeks of recordsdata generally timed out. To make such queries more responsive, we added a unique service that does write-time aggregation known as “counters”. Counters architecture has two major modifications:

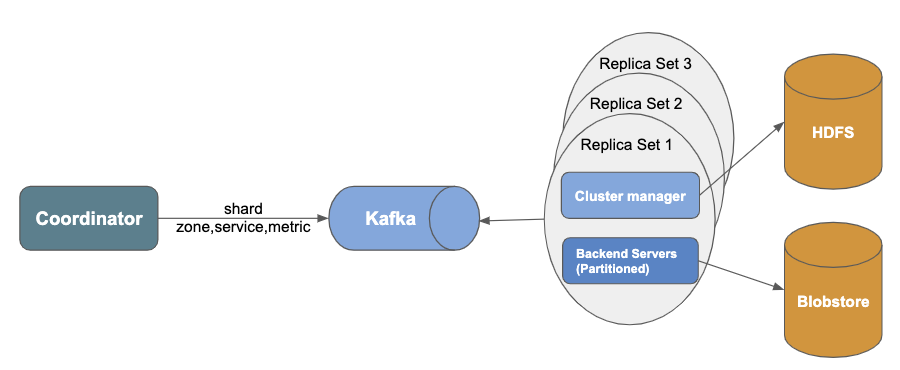

Better consistency

Files inconsistency is a bigger scenario with aggregates than with raw recordsdata aspects. Replicas can miss partial recordsdata and compute a good deal of mixture price for an identical timestamp. These are very not more seemingly to reconcile on the read route. To clear up this, we equipped Apache Kafka between coordinator and backend servers. We also removed AppendLog from counter cluster because Kafka acts as AppendLog.

Recent partitioning plan

As described earlier, for raw recordsdata aspects, we aged partitioning in step with (zone, service, source). To mixture metrics per service, we equipped a unique partitioning plan (zone, service, metric) in advise that a metric that belongs to the same service lands on the same partition. This affords greater recordsdata locality for doing aggregations. In put together, this also gave marginally greater balancing than (zone, service, source) partitioning. Nonetheless, metric-basically basically based partitioning introduced more challenges in ingestion route than source-basically basically based partitioning, because a write demand from sequence agent needs to be split into a pair of requests in step with partitioning plan. Apache Kafka producer has an risk to batch requests, which helped us decrease the replacement of requests to the queue and storage.

This Tweet is unavailable

Within the principle version, we present most classic aggregations, ‘SUM’ and ‘COUNT’ for all metrics in step with service. We notion to toughen a good deal of kinds of aggregations in come future.

This Tweet is unavailable

Summary

Utilizing a custom storage backend in feature of venerable key price store lowered the general ticket by a component of 10. This also lowered latency by a component of 5. More importantly, this enables us so as to add a good deal of facets that require greater scale. Besides, write time aggregations in step with service improved responsiveness vastly while cutting again the burden on MetricsDB. We notion so as to add toughen for dimensions, sub-minutely granularity soon.

This Tweet is unavailable

Acknowledgements

We may perchance perhaps perhaps well not fill carried out this with out the laborious work and dedication of everybody in Infrastructure engineering. Particular due to the Alex Angelo, Charlie Carson, Honggang Zhang, Muhammed Serdar Soran, Ning Wang, Ravi Kapuganty, Vishnu Challam

This Tweet is unavailable