Reinforcement learning improves game testing, AI team finds

As game worlds grow more vast and complex, making sure they’re playable and bug-free is becoming increasingly difficult for developers. Gaming companies are looking for new tools, including artificial intelligence, to help overcome the mounting challenge of testing their products.

A new paper by a team of AI researchers at Electronic Arts shows that deep reinforcement learning agents can help test games and make sure they’re balanced and solvable.

“Adversarial Reinforcement Learning for Procedural Content Generation,” the technique presented by the EA researchers, is a novel approach that addresses some of the shortcomings of previous AI methods for testing games.

Testing large game environments

“Today’s big titles can have more than 1,000 developers and often ship cross-platform on PlayStation, Xbox, mobile, etc.,” Linus Gisslén, senior machine learning research engineer at EA and lead author of the paper, told TechTalks. “Also, with the latest trend of open-world games and live service we see that a lot of content has to be procedurally generated at a scale that we previously have not seen in games. All this introduces a lot of ‘moving parts’ which all can create bugs in our games.”

Developers currently have two main tools at their disposal to test their games: scripted bots and human play-testers. Human play-testers are very good at finding bugs. But they can be slowed down immensely when dealing with vast environments. They can also get bored and distracted, especially in a very big game world. Scripted bots, on the other hand, are fast and scalable. But they can’t match the complexity of human testers, and they perform poorly in large environments such as open-world games, where mindless exploration isn’t necessarily a successful strategy.

“Our goal is to use reinforcement learning (RL) as a method to merge the advantages of humans (self-learning, adaptive, and curious) with scripted bots (fast, cheap and scalable),” Gisslén said.

Reinforcement learning is a branch of machine learning in which an AI agent tries to take actions that maximize its rewards in its environment. For example, in a game, the RL agent starts by taking random actions. Based on the rewards or punishments it receives from the environment (staying alive, losing lives or health, earning points, finishing a level, etc.), it develops an action policy that leads to the best outcomes.
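To make the reward loop concrete, here is a minimal sketch of tabular Q-learning, one classic RL algorithm, on a toy one-dimensional corridor. The environment, the function name `train_corridor_agent`, the reward values, and the hyperparameters are all illustrative assumptions; none of them come from the EA paper, which uses deep RL agents rather than a lookup table.

```python
import random


def train_corridor_agent(length=5, episodes=2000, alpha=0.5,
                         gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical corridor of `length` cells.

    The agent starts in cell 0 and the goal is the last cell.
    Returns the greedy action (-1 or +1) learned for each non-goal cell.
    """
    rng = random.Random(seed)
    actions = (-1, +1)  # step left, step right
    q = {(s, a): 0.0 for s in range(length) for a in actions}

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(actions)  # explore with a random action
            else:
                # exploit the current estimates, breaking ties randomly
                best = max(q[(state, a)] for a in actions)
                action = rng.choice(
                    [a for a in actions if q[(state, a)] == best])

            # walls at both ends: the agent cannot leave the corridor
            next_state = min(max(state + action, 0), length - 1)
            done = next_state == length - 1
            # small penalty per step, reward of 1 for reaching the goal
            reward = 1.0 if done else -0.01

            future = 0.0 if done else max(q[(next_state, a)] for a in actions)
            target = reward + gamma * future
            q[(state, action)] += alpha * (target - q[(state, action)])

            state = next_state
            if done:
                break

    return {s: max(actions, key=lambda a: q[(s, a)])
            for s in range(length - 1)}


policy = train_corridor_agent()
```

The agent begins with no knowledge and acts randomly. As rewards accumulate, the table of value estimates comes to favor stepping toward the goal from every cell, which is the "action policy" described above in its simplest form.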

Testing game content with adversarial reinforcement learning

In the past decade, AI research labs have used reinforcement learning to master complicated games. More recently, gaming companies have also become interested in using reinforcement learning and other machine learning techniques in the game development lifecycle.

For example, in game testing, an RL agent can be trained to learn a game by letting it play on existing content (maps, levels, etc.). Once the agent masters the game, it can help find bugs in new maps. The problem with this approach is that the RL system often ends up overfitting on the maps it has seen during training. This means that it becomes very good at exploring those maps but terrible at testing new ones.

The approach proposed by the EA researchers overcomes these limits with “adversarial reinforcement learning,” a technique inspired by generative adversarial networks (GANs), a type of deep learning architecture that pits two neural networks against each other to create and detect synthetic data.

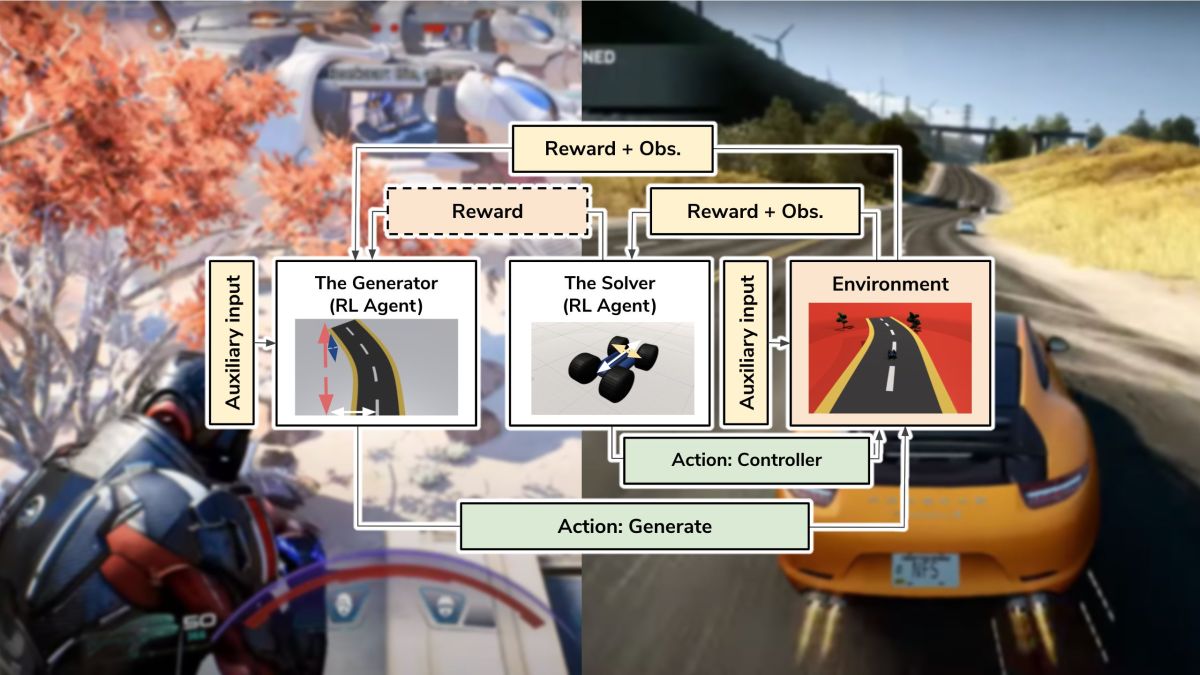

In adversarial reinforcement learning, two RL agents compete and collaborate to create and test game content. The first agent, the Generator, uses procedural content generation (PCG), a technique that automatically generates maps and other game elements. The second agent, the Solver, tries to finish the levels the Generator creates.

There is a symbiosis between the two agents. The Solver is rewarded for taking actions that help it pass the generated levels. The Generator, on the other hand, is rewarded for creating levels that are challenging but not impossible for the Solver to finish. The feedback that the two agents provide each other enables them to become better at their respective tasks as the training progresses.

The generation of levels takes place in a step-by-step fashion. For example, if the adversarial reinforcement learning system is being used for a platform game, the Generator creates one game block and moves on to the next one after the Solver manages to reach it.
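The block-by-block interplay can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's implementation: the Generator's choices are passed in as a fixed list of gap widths, the Solver is reduced to a single hypothetical `max_jump` ability, and the reward numbers are invented for the example. In the real system both agents are learned policies.

```python
def play_level(gaps, max_jump):
    """Simulate one step-by-step level in a hypothetical platform game.

    gaps: gap widths proposed by the Generator, one per block.
    max_jump: the widest gap the Solver can clear (stand-in for its skill).
    Returns (blocks_reached, solver_reward, generator_reward).
    """
    blocks_reached = 0
    solver_reward = 0.0
    generator_reward = 0.0

    for gap in gaps:
        if gap <= max_jump:
            # The Solver reaches the block and is rewarded for progress.
            blocks_reached += 1
            solver_reward += 1.0
            # The Generator earns more for harder blocks that stay solvable.
            generator_reward += gap / max_jump
        else:
            # The block is unreachable: both agents are penalized
            # and the level ends here.
            solver_reward -= 1.0
            generator_reward -= 1.0
            break

    return blocks_reached, solver_reward, generator_reward


reached, solver_total, generator_total = play_level(
    gaps=[1.0, 2.0, 3.0, 5.0, 1.0], max_jump=3.0)
```

In this run the Solver clears the first three gaps and fails on the fourth, so the final block is never placed. The Generator's total shows the trade-off it has to learn: wide gaps pay well only as long as the Solver can still cross them.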

“Using an adversarial RL agent is a vetted method in other fields, and is often needed to enable the agent to reach its full potential,” Gisslén said. “For example, DeepMind used a version of this when they let their Go agent play against different versions of itself in order to achieve super-human results. We use it as a tool for challenging the RL agent in training to become more general, meaning that it will be more robust to changes that happen in the environment, which is often the case in game-play testing where an environment can change on a daily basis.”

Gradually, the Generator will learn to create a variety of solvable environments, and the Solver will become more versatile in testing different environments.

A robust game-testing reinforcement learning system can be very useful. For example, many games have tools that allow players to create their own levels and environments. A Solver agent that has been trained on a variety of PCG-generated levels will be much more efficient at testing the playability of user-generated content than traditional bots.

One of the interesting details in the adversarial reinforcement learning paper is the introduction of “auxiliary inputs.” This is a side channel that affects the rewards of the Generator and enables game developers to control its learned behavior. In the paper, the researchers show how the auxiliary input can be used to control the difficulty of the levels generated by the AI system.
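One way to picture such a side channel is a single number that scales how much the Generator is paid for difficulty. The sketch below is an assumption-laden illustration: the function `generator_reward`, the ranges of `difficulty` and `aux`, and the formula itself are hypothetical and are not the reward defined in the paper.

```python
def generator_reward(solved, difficulty, aux):
    """Hypothetical Generator reward shaped by an auxiliary input.

    solved: whether the Solver finished the generated segment.
    difficulty: how hard the segment was, normalized to [0, 1].
    aux: developer-set knob in [-1, 1]; positive values favor hard
         segments, negative values favor easy ones.
    """
    if not solved:
        return -1.0  # unsolvable content is always penalized
    return 1.0 + aux * difficulty


hard_when_aux_high = generator_reward(True, difficulty=0.8, aux=1.0)
easy_when_aux_high = generator_reward(True, difficulty=0.2, aux=1.0)
hard_when_aux_low = generator_reward(True, difficulty=0.8, aux=-1.0)
easy_when_aux_low = generator_reward(True, difficulty=0.2, aux=-1.0)
```

With the knob set high, the hard segment earns more than the easy one. With it set low, the ordering flips. A Generator trained across many settings of the knob could then be steered at test time by changing one input rather than retraining.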

EA’s AI research team applied the technique to a platform game and a racing game. In the platform game, the Generator gradually places blocks from the starting point to the goal. The Solver is the player and must jump from block to block until it reaches the goal. In the racing game, the Generator places the segments of the track, and the Solver drives the car to the finish line.

The researchers show that by using the adversarial reinforcement learning system and tuning the auxiliary input, they were able to control and adjust the generated game environment at different levels.

Their experiments also show that a Solver trained with adversarial machine learning is much more robust than traditional game-testing bots or RL agents that have been trained with fixed maps.

Applying adversarial reinforcement learning to real games

The paper doesn’t provide a detailed explanation of the architecture the researchers used for the reinforcement learning system. The little information that is in there shows that the Generator and Solver use simple, two-layer neural networks with 512 units, which shouldn’t be very costly to train. However, the example games that the paper includes are relatively simple, and the architecture of the reinforcement learning system should vary depending on the complexity of the environment and action space of the target game.
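A quick parameter count gives a sense of why such a network is cheap. The sketch assumes "two-layer with 512 units" means two fully connected hidden layers of 512 units each. The input size of 32 and output size of 4 are placeholders chosen for illustration, since the article does not give the observation or action dimensions.

```python
def mlp_parameter_count(input_size, hidden_sizes, output_size):
    """Count weights and biases in a fully connected network."""
    sizes = [input_size, *hidden_sizes, output_size]
    # Each layer has fan_in * fan_out weights plus fan_out biases.
    return sum(fan_in * fan_out + fan_out
               for fan_in, fan_out in zip(sizes, sizes[1:]))


# Hypothetical 32-dimensional observation and 4 possible actions.
total = mlp_parameter_count(32, [512, 512], 4)
print(total)  # 281604
```

Under these assumptions the network has fewer than 300,000 parameters, and most of them sit in the 512-by-512 connection between the two hidden layers. That is small by deep learning standards.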

“We tend to take a pragmatic approach and try to keep the training cost at a minimum as this has to be a viable option when it comes to ROI for our QV (Quality Verification) teams,” Gisslén said. “We try to keep the skill range of each trained agent to just include one skill/objective (e.g., navigation or target selection) as having multiple skills/objectives scales very poorly, causing the models to be very expensive to train.”

The work is still in the research stage, Konrad Tollmar, research director at EA and co-author of the paper, told TechTalks. “But we’re having collaborations with various game studios across EA to explore if this is a viable approach for their needs. Overall, I’m truly optimistic that ML is a technique that will be a standard tool in any QV team in the future in some shape or form,” he said.

Adversarial reinforcement learning agents can help human testers focus on evaluating parts of the game that can’t be tested with automated systems, the researchers believe.

“Our vision is that we can unlock the potential of human playtesters by moving from mundane and repetitive tasks, like finding bugs where the players can get stuck or fall through the ground, to more interesting use-cases like testing game-balance, meta-game, and ‘funness,’” Gisslén said. “These are things that we don’t see RL agents doing in the near future but are immensely important to games and game production, so we don’t want to spend human resources doing basic testing.”

The RL system can become an important part of creating game content, as it will enable designers to evaluate the playability of their environments as they create them. In a video that accompanies their paper, the researchers show how a level designer can get help from the RL agent in real time while placing blocks for a platform game.

Eventually, this and other AI systems can become an important part of content and asset creation, Tollmar believes.

“The tech is still new and we still have a lot of work to be done in production pipeline, game engine, in-house expertise, etc. before this can fully take off,” he said. “However, with the current research, EA will be ready when AI/ML becomes a mainstream technology that is used across the gaming industry.”

As research in the field continues to advance, AI can eventually play a more important role in other parts of game development and the gaming experience.

“I think as the technology matures and acceptance and expertise grows within gaming companies this will be not only something that is used within testing but also as game-AI whether it is collaborative, opponent, or NPC game-AI,” Tollmar said. “A fully trained testing agent can of course also be imagined being a character in a shipped game that you can play against or collaborate with.”

Ben Dickson is a software engineer and the founder of TechTalks. He writes about technology, business, and politics.

This story originally appeared on Bdtechtalks.com. Copyright 2021
