The next generation of wearables will be a privacy minefield

But if you’re among those who think Facebook already knows too much about our lives, you’re probably more than a little unnerved by the idea of Facebook having a semi-permanent presence on your actual face.

Facebook, to its credit, is aware of this. The company published a lengthy blog post on all the ways it’s taking privacy into consideration. For example, it says workers who wear the glasses will be easily identifiable and will be trained in “appropriate use.” The company will also encrypt data and blur faces and license plates. It promises the data it collects “will not be used to inform the ads people see across Facebook’s apps,” and only approved researchers will be able to access it.

But none of that addresses how Facebook intends to use this data or what type of “research” it will be used for. Yes, it will further the social network’s understanding of augmented reality, but there’s a whole lot else that comes with that. As the digital rights organization Electronic Frontier Foundation (EFF) noted in a recent blog post, eye tracking alone has numerous implications beyond the core functions of an AR or VR headset. Our eyes can reveal how we’re thinking and feeling, not just what we’re looking at.

As the EFF’s Rory Mir and Katitza Rodriguez explained in the post:

How we move and interact with the world offers insight, by proxy, into how we think and feel at the moment. If aggregated, those in control of this biometric data may be able to identify patterns that let them more precisely predict (or cause) certain behavior and even emotions in the virtual world. It may allow companies to exploit users’ emotional vulnerabilities through strategies that are difficult for the user to perceive and resist. What makes the collection of this sort of biometric data particularly frightening, is that unlike a credit card or password, it is information about us we cannot change. Once collected, there is little users can do to mitigate the harm done by leaks or data being monetized with additional parties.

There’s also a more practical concern, according to Rodriguez and Mir. That’s “bystander privacy,” or the right to privacy in public. “I’m concerned that if the protections aren’t the right ones, with this technology, we could be building a surveillance society where users lose their privacy in public spaces,” Rodriguez, International Rights Director for EFF, told Engadget. “I think these companies are going to push for new changes in society in how we behave in public spaces. And they have to be much more transparent on that front.”

In a statement, a Facebook spokesperson said that “Project Aria is a research tool that will help us develop the safeguards, policies and even social norms necessary to govern the use of AR glasses and other future wearable devices.”

Facebook is far from the only company to grapple with these questions. Apple, which is also reportedly working on an AR headset, appears to be experimenting with eye tracking as well. Amazon, on the other hand, has taken a different approach when it comes to the ability to gauge our emotional state.

Consider its newest wearable: Halo. At first glance, the device, which is an actual product people will soon be able to use, seems much closer to the kinds of wrist-worn devices that are already widely available. It can check your heart rate and track your sleep. It also has one other feature you won’t find on your standard Fitbit or smartwatch: tone analysis.

Opt in and the wearable will passively listen to your voice throughout the day in order to “analyze the positivity and energy of your voice.” It’s supposed to aid your overall well-being, according to Amazon. The company suggests that the feature will “help customers understand how they sound to others,” and “support emotional and social well-being and help strengthen communication and relationships.”

If that sounds vaguely dystopian, you’re not alone; the feature has already sparked more than one Black Mirror comparison. Also concerning: history has repeatedly taught us that these kinds of systems often end up being extremely biased, regardless of their creators’ intent. As Protocol points out, AI systems tend to be pretty bad at treating women and people of color the same way they treat white men. Amazon itself has struggled with this. A study last year from MIT’s Media Lab found that Amazon’s facial recognition tech had a hard time accurately identifying the faces of dark-skinned women. And a 2019 Stanford study found racial disparities in Amazon’s speech recognition tech.

So while Amazon has said it uses diverse data to train its algorithms, it’s far from guaranteed that it will treat all users the same in practice. But even if it did treat everyone fairly, giving Amazon a direct line into your emotional state could still have serious privacy implications.

And not just because it’s creepy for the world’s largest retailer to know how you’re feeling at any given moment. There’s also the distinct possibility that Amazon could, one day, use these newfound insights to get you to buy more stuff. Just because there’s currently no link between Halo and Amazon’s retail service or Alexa doesn’t mean that will always be the case. In fact, we know from patent filings that Amazon has given the idea more than a passing thought.

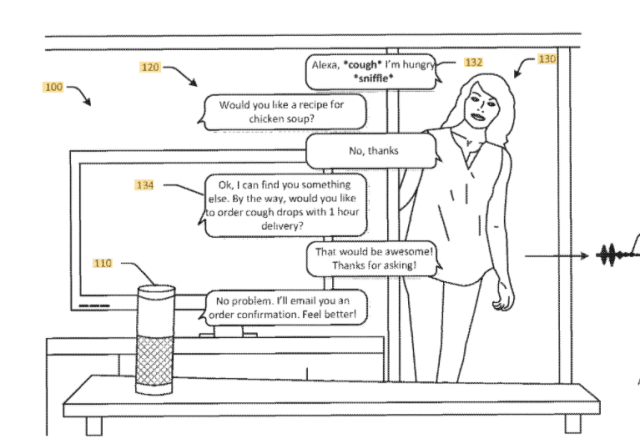

The company was granted a patent two years ago that lays out in detail how Alexa could proactively recommend products based on how your voice sounds. The patent describes a system that would allow Amazon to detect “an abnormal physical or emotional condition” based on the sound of a voice. It could then suggest content, surface ads and recommend products based on the “abnormality.” Patent filings aren’t necessarily indicative of actual plans, but they do offer a window into how a company is thinking about a particular type of technology. And in Amazon’s case, its ideas for emotion detection are more than a little alarming.

An Amazon spokesperson told Engadget that “we do not use Amazon Halo health data for marketing, product recommendations, or advertising,” but declined to comment on future plans. The patent offers some possible clues, though.

“A current physical and/or emotional condition of the user may facilitate the ability to provide highly targeted audio content, such as audio advertisements or promotions,” the patent states. “For example, certain content, such as content related to cough drops or flu medicine, may be targeted towards users who have sore throats.”

In another example, helpfully illustrated by Amazon, an Echo-like device recommends a chicken soup recipe when it hears a cough and a sniffle.

As unsettling as that sounds, Amazon makes clear that it’s not only taking the sound of your voice into account. The patent notes that it may also use your browsing and purchase history, “number of clicks,” and other metadata to target content. In other words, Amazon would use not just your perceived emotional state, but everything else it knows about you, to target products and ads.

Which brings us back to Facebook. Whatever product Aria eventually becomes, it’s impossible now, in 2020, to fathom a version of this that won’t violate our privacy in new and creative ways in order to feed into Facebook’s already disturbingly accurate ad machine.

Facebook’s mobile apps already vacuum up an incredible amount of data about where we go, what we buy and just about everything else we do on the internet. The company may have desensitized us enough at this point to take that for granted, but it’s worth considering how much more we’re willing to give away. What happens when Facebook knows not just where we go and who we see, but everything we look at?

A Facebook spokesperson said the company would “be upfront about any plans related to ads.”

“Project Aria is a research effort and its purpose is to help us understand the hardware and software needed to build AR glasses – not to personalize ads. In the event any of this technology is integrated into a commercially available device in the future, we will be upfront about any plans related to ads.”

A promise of transparency, however, is very different from an assurance of what will happen to our data. And it highlights why privacy legislation is so important: without it, we have little choice but to take a company’s word for it.

“Facebook is positioning itself to be the Android of AR VR,” Mir said. “I think because they’re in their infancy, it makes sense that they are taking precautions to keep data separate from advertising and all these things. But the concern is, once they do control the medium or have an Android-level control of the market, at that point, how are we ensuring that they are sticking to good privacy practices?”

And the question of good privacy practices only becomes more urgent when you consider how much more data companies like Facebook and Amazon are poised to have access to. Products like Halo and research projects like Aria may be experimental for now, but that may not always be the case. And, in the absence of stronger regulations, there will be little preventing them from using these new insights about us to further their dominance.

“There are no federal privacy laws in the United States,” Rodriguez said. “People rely on privacy policies, but privacy policies change over time.”