What’s federated learning?

In partnership with Paperspace

One of the main challenges of machine learning is the need for large amounts of data. Gathering training datasets for machine learning models poses privacy, security, and processing risks that organizations would rather avoid.

One technique that can help address some of these challenges is “federated learning.” By distributing the training of models across user devices, federated learning makes it possible to take advantage of machine learning while minimizing the need to collect user data.

Cloud-based machine learning

The traditional process for developing machine learning applications is to gather a large dataset, train a model on the data, and run the trained model on a cloud server that users can reach through different applications such as web search, translation, text generation, and image processing.

Every time the application wants to use the machine learning model, it has to send the user’s data to the server where the model resides.

In many cases, sending data to the server is unavoidable. For example, content recommendation systems depend on this paradigm because part of the data and content needed for machine learning inference resides on the cloud server.

But in applications such as text autocompletion or facial recognition, the data is local to the user and the device. In these cases, it would be preferable for the data to stay on the user’s device rather than being sent to the cloud.

Fortunately, advances in edge AI have made it possible to avoid sending sensitive user data to application servers. Also known as TinyML, this is an active area of research that tries to create machine learning models that fit on smartphones and other user devices. These models make it possible to perform on-device inference. Large tech companies are trying to bring some of their machine learning applications to users’ devices to improve privacy.
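To make the difference concrete, here is a minimal Python sketch of the two deployment patterns. The "model" is a hypothetical word-frequency autocompleter, and the names `ToyAutocomplete`, `CloudServer`, and `on_device_suggest` are illustrative assumptions, not any real API. The only point is where the user's input ends up.

```python
from collections import Counter
from typing import List, Optional


class ToyAutocomplete:
    """Hypothetical stand-in for a trained model: suggests the most frequent
    known word that starts with the typed prefix."""

    def __init__(self, corpus: str) -> None:
        self.counts = Counter(corpus.lower().split())

    def suggest(self, prefix: str) -> Optional[str]:
        candidates = [w for w in self.counts if w.startswith(prefix.lower())]
        return max(candidates, key=lambda w: self.counts[w], default=None)


class CloudServer:
    """Cloud pattern: the model lives on the server, so each request
    carries the user's raw input over the network."""

    def __init__(self, model: ToyAutocomplete) -> None:
        self.model = model
        self.received: List[str] = []  # raw user inputs the server has seen

    def handle(self, user_input: str) -> Optional[str]:
        self.received.append(user_input)  # the user's data now lives server-side
        return self.model.suggest(user_input)


def on_device_suggest(local_model: ToyAutocomplete, user_input: str) -> Optional[str]:
    # On-device pattern: the model was shipped to the phone ahead of time,
    # so the input is processed locally and never transmitted.
    return local_model.suggest(user_input)


model = ToyAutocomplete("federated learning keeps data on the device federated")
server = CloudServer(model)
print(server.handle("fed"))             # cloud: "fed" is sent to the server
print(on_device_suggest(model, "dev"))  # on-device: "dev" stays local
print(server.received)                  # only the cloud request was recorded
```

In the cloud version the server's `received` list fills up with whatever users type; in the on-device version nothing is sent anywhere.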

On-device machine learning has several added benefits. These applications can keep working even when the device is not connected to the internet. They also save bandwidth when users are on metered connections. And in many applications, on-device inference is more energy-efficient than sending data to the cloud.

Training on-device machine learning models

On-device inference is an important privacy upgrade for machine learning applications. But one challenge remains: Developers still need data to train the models they will push to users’ devices. This isn’t a problem when the organization developing the models already owns the data (e.g., a bank owns its transactions) or the data is public (e.g., Wikipedia or news articles).

But if a company wants to train machine learning models on confidential user information such as emails, chat logs, or personal photos, then collecting training data entails many challenges. The company will have to make sure that its collection and storage policy complies with the various data protection regulations and that the data is anonymized to remove personally identifiable information (PII).

Once the machine learning model is trained, the development team must decide whether to keep or discard the training data. They will also need a policy and process for continuing to collect data from users to retrain and update their models regularly.

This is the challenge federated learning addresses.

Federated learning

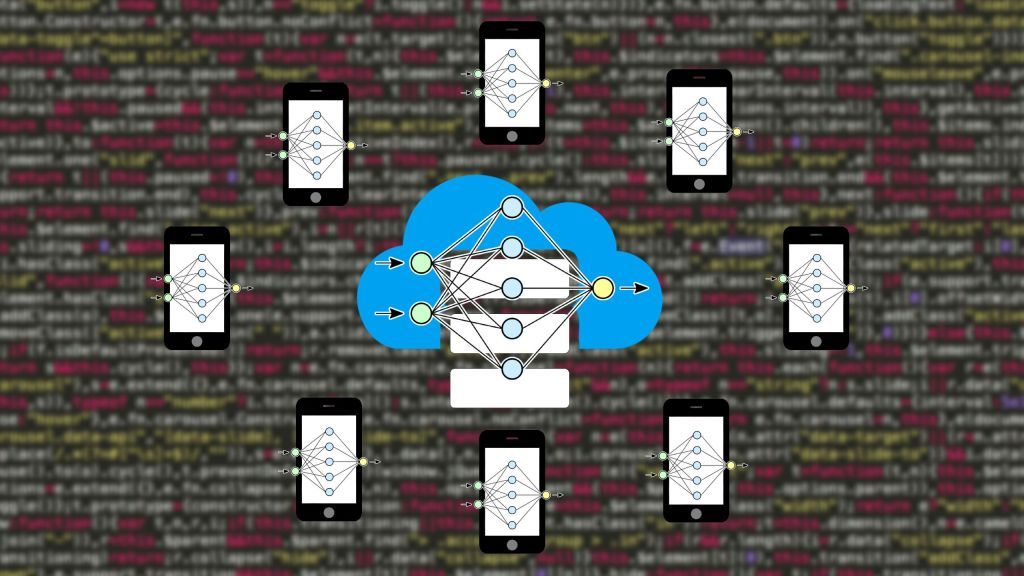

The basic idea behind federated learning is to train a machine learning model on user data without the need to transfer that data to cloud servers.

Federated learning starts with a base machine learning model on the cloud server. This model is either trained on public data (e.g., Wikipedia articles or the ImageNet dataset) or has not been trained at all.

In the next stage, several user devices volunteer to train the model. These devices hold user data that is relevant to the model’s application, such as chat logs and keystrokes.

These devices download the base model at a suitable time, for instance when they are on a Wi-Fi network and connected to a power outlet (training is a compute-intensive operation and can drain the device’s battery if done at the wrong time). Then they train the model on the device’s local data.
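The scheduling condition described above can be sketched as a simple eligibility check that a client runs before downloading the base model. The `DeviceState` fields and function names are hypothetical, Wi-Fi is treated here as an unmetered connection, and a real client would read these values from the operating system rather than from a hand-built fleet like the one simulated below.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DeviceState:
    """Hypothetical snapshot of the conditions a client checks before training."""
    on_wifi: bool   # treated as unmetered, so downloads don't use the data plan
    charging: bool  # plugged in, so training doesn't drain the battery


def eligible_for_training(state: DeviceState) -> bool:
    # Only volunteer for a training round when both conditions hold.
    return state.on_wifi and state.charging


def eligible_devices(fleet: Dict[str, DeviceState]) -> List[str]:
    """Return the IDs of devices that may download the base model now."""
    return sorted(device_id for device_id, state in fleet.items()
                  if eligible_for_training(state))


fleet = {
    "phone-a": DeviceState(on_wifi=True, charging=True),
    "phone-b": DeviceState(on_wifi=True, charging=False),
    "phone-c": DeviceState(on_wifi=False, charging=True),
}
print(eligible_devices(fleet))  # ['phone-a']
```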

After training, the devices return the trained model to the server. Popular machine learning algorithms such as deep neural networks and (linear) support vector machines are parametric. Once trained, they encode the statistical patterns of their data in numerical parameters and no longer need the training data for inference. Therefore, when a device sends the trained model back to the server, it doesn’t contain raw user data.
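A minimal sketch of the local step, assuming a toy one-dimensional linear model y = w * x + b trained with full-batch gradient descent on mean squared error. The model, learning rate, and epoch count are illustrative choices, not part of the original description. The function returns only numeric parameters and an example count; the `(x, y)` pairs never leave it.

```python
from typing import Dict, List, Tuple


def local_train(base_params: Dict[str, float],
                local_data: List[Tuple[float, float]],
                lr: float = 0.05,
                epochs: int = 100) -> Dict[str, float]:
    """Train a 1-D linear model y = w * x + b on this device's data with
    full-batch gradient descent on mean squared error, starting from the
    base model's parameters. Only the parameters are returned."""
    w, b = base_params["w"], base_params["b"]
    n = len(local_data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in local_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in local_data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    # The raw (x, y) pairs are not in the result, only numbers derived from them.
    return {"w": w, "b": b, "num_examples": n}


on_device_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # stays on the phone
update = local_train({"w": 0.0, "b": 0.0}, on_device_data)
print(sorted(update))  # ['b', 'num_examples', 'w']
```

The returned dictionary is what travels to the server. As the privacy section below notes, these parameters are not entirely free of information about the data.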

Once the server receives the trained models from user devices, it updates the base model with the aggregated parameter values of the user-trained models.
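The article does not specify how the parameters are aggregated. One common choice is Federated Averaging (FedAvg), which takes a weighted mean of each parameter, weighting every client by the number of local examples it trained on. A minimal sketch, assuming each update is a dictionary of scalar parameters plus a `num_examples` count (a format chosen here for illustration):

```python
from typing import Dict, List


def federated_average(updates: List[Dict[str, float]]) -> Dict[str, float]:
    """Aggregate client updates into new base-model parameters with a
    FedAvg-style weighted mean: clients that trained on more examples
    get proportionally more influence."""
    total = sum(u["num_examples"] for u in updates)
    if total == 0:
        raise ValueError("no training examples reported")
    names = [k for k in updates[0] if k != "num_examples"]
    return {
        name: sum(u[name] * u["num_examples"] for u in updates) / total
        for name in names
    }


updates = [
    {"w": 1.0, "b": 0.0, "num_examples": 10},
    {"w": 3.0, "b": 2.0, "num_examples": 30},
]
print(federated_average(updates))  # {'w': 2.5, 'b': 1.5}
```

A plain unweighted mean would give `w = 2.0` here; weighting by example count keeps a client with very little data from pulling the model as hard as one with a lot.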

The federated learning cycle must be repeated several times before the model reaches the level of accuracy the developers want. Once the final model is ready, it can be distributed to all users for on-device inference.
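Putting the steps together, the sketch below runs repeated rounds until the aggregated loss falls below a target. It assumes the same kind of toy linear model, a fixed set of clients that all participate every round, and a stopping threshold picked for illustration; the helper names are hypothetical. Starting from `(0.0, 0.0)` plays the role of an untrained base model.

```python
from typing import List, Tuple

Params = Tuple[float, float]          # (w, b) of a 1-D linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs held by one client


def mse(params: Params, data: Dataset) -> float:
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def local_update(params: Params, data: Dataset, lr: float, steps: int) -> Params:
    """A few full-batch gradient descent steps on one client's data."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def run_federated_training(clients: List[Dataset], target_mse: float = 0.01,
                           max_rounds: int = 200, lr: float = 0.05,
                           local_steps: int = 5) -> Tuple[Params, int, float]:
    params: Params = (0.0, 0.0)  # untrained base model
    total = sum(len(d) for d in clients)
    loss = float("inf")
    for round_num in range(1, max_rounds + 1):
        # 1. Each client trains the current base model on its own data.
        local = [(local_update(params, d, lr, local_steps), len(d)) for d in clients]
        # 2. The server replaces the base model with the weighted average.
        params = (sum(p[0] * n for p, n in local) / total,
                  sum(p[1] * n for p, n in local) / total)
        # 3. Clients report loss on their data; the server sees the weighted mean.
        loss = sum(mse(params, d) * len(d) for d in clients) / total
        if loss <= target_mse:
            return params, round_num, loss
    return params, max_rounds, loss


clients = [[(0.0, 1.0), (1.0, 3.0)],   # client A: small inputs
           [(2.0, 5.0), (3.0, 7.0)]]   # client B: large inputs
(w, b), rounds, loss = run_federated_training(clients)
print(f"w={w:.2f} b={b:.2f} after {rounds} rounds (mse={loss:.4f})")
```

Here the two clients cover different input ranges but their data come from the same underlying line, so the loop converges to it. When client data genuinely conflict (non-IID data), FedAvg can converge more slowly or to a point that is not the global optimum.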

Limits of federated learning

Federated learning does not apply to all machine learning applications. If the model is too large to run on user devices, then the developer will need to find other workarounds to preserve user privacy.

Developers must also make sure that the data on user devices is relevant to the application. The traditional machine learning development cycle involves intensive data cleaning, in which data engineers remove misleading data points and fill the gaps where data is missing. Training machine learning models on irrelevant data can do more harm than good.

When the training data is on the user’s device, the data engineers have no way of evaluating the data and making sure it will be beneficial to the application. For this reason, federated learning must be limited to applications where the user data does not need preprocessing.

Another limit of federated machine learning is data labeling. Most machine learning models are supervised, which means they require training examples that are manually labeled by human annotators. For example, the ImageNet dataset is a crowdsourced repository that contains millions of images and their corresponding classes.

In federated learning, unless outcomes can be inferred from user interactions (e.g., predicting the next word the user is typing), the developers can’t expect users to go out of their way to label training data for the machine learning model. Federated learning is better suited to unsupervised (or self-supervised) learning applications such as language modeling.
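Next-word prediction is the standard case where the label comes for free. A minimal sketch, assuming simple whitespace tokenization and a fixed-size context window (both illustrative choices, and the function name is hypothetical), of how a device could turn a user's own typing into training pairs:

```python
from typing import List, Tuple


def next_word_examples(typed_text: str,
                       context_size: int = 2) -> List[Tuple[Tuple[str, ...], str]]:
    """Turn text the user typed into (context, next_word) training pairs.
    The label is simply the word the user actually typed next, so no human
    annotator is involved."""
    words = typed_text.lower().split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]


for context, label in next_word_examples("See you at the station"):
    print(context, "->", label)
# ('see', 'you') -> at
# ('you', 'at') -> the
# ('at', 'the') -> station
```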

Privacy implications of federated learning

While sending trained model parameters to the server is less privacy-sensitive than sending user data, it doesn’t mean that the model parameters are completely free of private information.

In fact, many experiments have shown that trained machine learning models can memorize user data. Membership inference attacks can use trial and error to determine whether a given record was part of a model’s training data, and related attacks can recreate training data from some models.
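As an illustration, here is the simplest form of membership inference, a loss-threshold attack: a model that has memorized its training data tends to have unusually low error on those records, so the attacker guesses "member" whenever the loss falls below a threshold. The deliberately overfit nearest-neighbor model, the threshold, and the data are all hypothetical.

```python
from typing import Callable, List, Tuple

Record = Tuple[float, float]


def nearest_neighbor_model(train: List[Record]) -> Callable[[float], float]:
    """A deliberately overfit 'model' that memorizes its training set:
    it returns the label of the closest training input."""
    def predict(x: float) -> float:
        return min(train, key=lambda rec: abs(rec[0] - x))[1]
    return predict


def loss_threshold_attack(predict: Callable[[float], float], record: Record,
                          threshold: float = 0.01) -> bool:
    """Guess that `record` was in the training set if the model's squared
    error on it is suspiciously low."""
    x, y = record
    return (predict(x) - y) ** 2 < threshold


train = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]
predict = nearest_neighbor_model(train)
print(loss_threshold_attack(predict, (2.0, 3.9)))  # True: a training record
print(loss_threshold_attack(predict, (1.5, 3.0)))  # False: never seen in training
```

Real models memorize far less blatantly than this one, so practical attacks rely on subtler statistical gaps between member and non-member losses. The principle is the same.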

One important remedy for the privacy concerns of federated learning is to discard the user-trained models after they are integrated into the central model. The cloud server doesn’t need to store individual models once it has updated its base model.
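A minimal sketch of a server that never keeps individual user-trained models, assuming weighted averaging as the aggregation rule: each update is folded into running totals as it arrives and is not stored. The class name and update format are hypothetical.

```python
from typing import Dict


class StreamingAggregator:
    """Folds each client update into a running weighted sum and then drops it,
    so no individual user-trained model is retained on the server."""

    def __init__(self) -> None:
        self._weighted_sum: Dict[str, float] = {}
        self._total_examples = 0

    def add(self, update: Dict[str, float], num_examples: int) -> None:
        for name, value in update.items():
            self._weighted_sum[name] = (
                self._weighted_sum.get(name, 0.0) + value * num_examples
            )
        self._total_examples += num_examples
        # `update` is not kept anywhere; only the running totals survive.

    def finalize(self) -> Dict[str, float]:
        """Return the new base-model parameters and reset for the next round."""
        if self._total_examples == 0:
            raise ValueError("no updates received")
        result = {k: v / self._total_examples for k, v in self._weighted_sum.items()}
        self._weighted_sum, self._total_examples = {}, 0
        return result


agg = StreamingAggregator()
agg.add({"w": 1.0, "b": 0.0}, num_examples=10)
agg.add({"w": 3.0, "b": 2.0}, num_examples=30)
print(agg.finalize())  # {'w': 2.5, 'b': 1.5}
```

This only limits what the aggregation code retains. Updates still pass through server memory while they are being added, so the approach complements rather than replaces the other measures discussed here.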

Another measure that can help is to enlarge the pool of model trainers. For example, if a model needs to be trained on the data of 100 users, the engineers can expand their pool of trainers to 250 or 500 users. For each training iteration, the system will send the base model to 100 random users from the training pool. This way, the system doesn’t collect trained parameters from any single user in every round.
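The sampling scheme in this example can be simulated in a few lines. The sketch uses Python's `random.Random.sample` to draw 100 participants per round from a pool of 500 users (the numbers from the text), so each user takes part in about 100 / 500 = 20% of rounds rather than every round. The function names are hypothetical.

```python
import random
from typing import List


def sample_round_participants(pool: List[int], per_round: int,
                              rng: random.Random) -> List[int]:
    """Draw a fresh random subset of the training pool for one round
    (sampling without replacement, so nobody is picked twice per round)."""
    return rng.sample(pool, per_round)


def participation_rates(pool_size: int, per_round: int, rounds: int,
                        seed: int = 0) -> List[float]:
    """Simulate many rounds and return the fraction of rounds each user joined."""
    rng = random.Random(seed)
    pool = list(range(pool_size))
    counts = [0] * pool_size
    for _ in range(rounds):
        for user in sample_round_participants(pool, per_round, rng):
            counts[user] += 1
    return [c / rounds for c in counts]


rates = participation_rates(pool_size=500, per_round=100, rounds=1000)
print(f"average participation: {sum(rates) / len(rates):.2f}")  # 0.20
print(f"most active user: {max(rates):.2f}")
```

With a pool of 250 the same calculation gives 40%: the larger the pool relative to the per-round sample, the less often any one user's parameters reach the server.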

Finally, by adding a bit of noise to the trained parameters and using normalization techniques, developers can considerably reduce the model’s ability to memorize users’ data.
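The text does not name a specific method. A common concrete version of this idea, used in differentially private federated averaging, is to clip each client update to a maximum L2 norm and then add Gaussian noise. The sketch below assumes that interpretation of "normalization" and operates on the update (the change in parameters) as a flat list of floats. `max_norm` and `noise_std` are illustrative and are not calibrated to any formal privacy guarantee.

```python
import math
import random
from typing import List, Optional


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm:
        return list(update)
    return [v * (max_norm / norm) for v in update]


def privatize_update(update: List[float], max_norm: float = 1.0,
                     noise_std: float = 0.1,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Clip a client's parameter update, then add independent Gaussian noise
    to every coordinate. max_norm and noise_std are illustrative values,
    not calibrated to a formal privacy budget."""
    if rng is None:
        rng = random.Random()
    clipped = clip_update(update, max_norm)
    return [v + rng.gauss(0.0, noise_std) for v in clipped]


update = [3.0, 4.0]                  # L2 norm 5.0
print(clip_update(update, 1.0))      # scaled to norm 1.0, roughly [0.6, 0.8]
print(privatize_update(update, rng=random.Random(0)))
```

Clipping bounds how much any single user can move the model, which is what makes the added noise effective. In many deployments the noise is added to the aggregate on the server rather than on each device.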

Federated learning is gaining popularity because it addresses some of the fundamental problems of modern artificial intelligence. Researchers are constantly looking for new ways to apply federated learning to new AI applications and to overcome its limits. It will be interesting to see how the field evolves in the future.

Ben Dickson is a software engineer and the founder of TechTalks. He writes about technology, business, and politics.

This story originally appeared on Bdtechtalks.com. Copyright 2021

VentureBeat
