When AI flags the ruler, not the tumor, and other arguments for abolishing the black box (VB Live)

AI helps health care professionals do their jobs efficiently and effectively, but it needs to be used responsibly, ethically, and equitably. In this VB Live event, get an in-depth perspective on the strengths and limitations of data, AI methodology and more.

Hear more from Brian Christian during our VB Live event on March 31.

Register here for free.

One of the big issues that exists within AI generally, but is particularly acute in health care settings, is transparency. AI models such as deep neural networks have a reputation for being black boxes. That's especially concerning in a medical setting, where caregivers and patients alike need to understand why recommendations are being made.

“That’s both because it’s integral to the trust in the doctor-patient relationship, and also as a sanity check, to make sure these models are, in fact, learning the things they’re supposed to be learning and functioning the way we would expect,” says Brian Christian, author of The Alignment Problem, Algorithms to Live By and The Most Human Human.

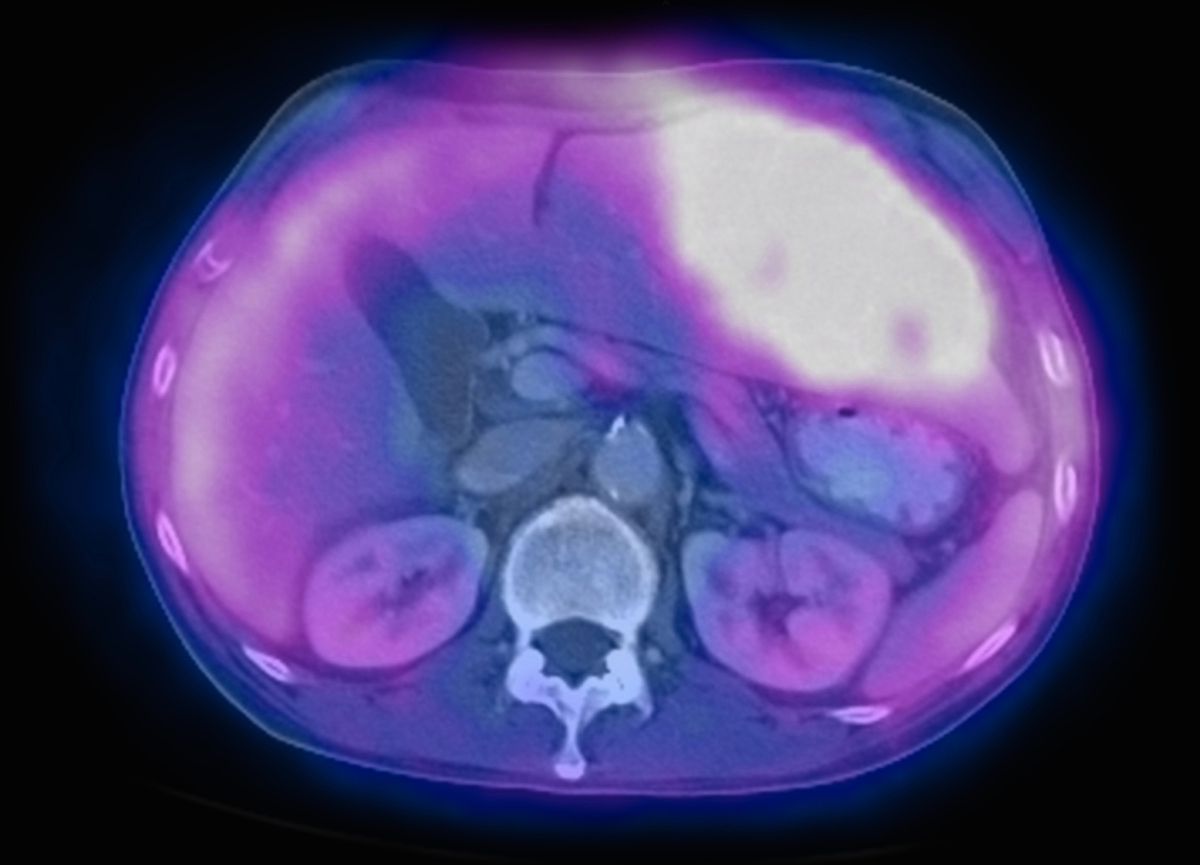

He points to the example of the neural network that famously reached a level of accuracy comparable to human dermatologists at diagnosing malignant skin lesions. However, a closer examination of the model using saliency methods revealed that the single most influential thing this model was looking for in a picture of someone’s skin was the presence of a ruler. Because medical images of cancerous lesions tend to include a ruler for scale, the model learned to treat the presence of a ruler as a marker of malignancy, because that’s much easier than telling the difference between different kinds of lesions.

“It’s precisely this kind of thing that explains remarkable accuracy in a test setting, but is completely useless in the real world, because patients don’t come with rulers helpfully pre-attached when [a tumor] is malignant,” Christian says. “That’s a perfect example, and it’s one of many reasons why transparency is essential in this setting in particular.”
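The ruler shortcut can be reproduced in miniature. The sketch below is a hypothetical toy, not the dermatology model Christian describes: each "image" is reduced to two made-up binary features (`has_ruler` and `irregular_border`), and a deliberately naive learner keeps whichever single feature best fits the training data. Because every malignant training example happens to include a ruler, the learner picks the ruler, scores perfectly in testing, and catches none of the malignant cases once the rulers disappear.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Image:
    """A toy stand-in for a skin photo, reduced to two hypothetical binary features."""
    has_ruler: bool         # spurious cue: suspicious lesions get photographed with a ruler
    irregular_border: bool  # hypothetical genuine, but noisier, signal
    malignant: bool         # ground-truth label

FEATURES = ("has_ruler", "irregular_border")

def training_accuracy(data, feature):
    """Accuracy of the rule 'predict malignant iff this feature is present'."""
    hits = sum(getattr(img, feature) == img.malignant for img in data)
    return hits / len(data)

def pick_single_feature(data):
    """Naive learner: keep whichever single feature best fits the training set."""
    return max(FEATURES, key=lambda f: training_accuracy(data, f))

def recall(data, feature):
    """Fraction of malignant cases the one-feature rule actually catches."""
    malignant = [img for img in data if img.malignant]
    return sum(getattr(img, feature) for img in malignant) / len(malignant)

# Invented training set: every malignant image has a ruler; the border cue is right 8 times in 10.
train = (
    [Image(True, True, True)] * 40 + [Image(True, False, True)] * 10
    + [Image(False, False, False)] * 40 + [Image(False, True, False)] * 10
)
# Invented deployment set: patients arrive without rulers, whatever the diagnosis.
deploy = (
    [Image(False, True, True)] * 40 + [Image(False, False, True)] * 10
    + [Image(False, False, False)] * 40 + [Image(False, True, False)] * 10
)

chosen = pick_single_feature(train)
print(chosen)                              # has_ruler
print(training_accuracy(train, chosen))    # 1.0
print(recall(deploy, chosen))              # 0.0
print(recall(deploy, "irregular_border"))  # 0.8
```

The noisier border feature is the one that would have generalized; a saliency check that reveals the model is leaning on the ruler is what exposes the problem before deployment.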

At a conceptual level, one of the biggest issues in all of machine learning is that there’s almost always a gap between the thing you can readily measure and the thing you actually care about.

He points to the model developed in the 1990s by a group of researchers in Pittsburgh to estimate the severity of pneumonia cases in order to triage patients between inpatient and outpatient treatment. One thing this model learned was that, on average, people with asthma who come in with pneumonia have better health outcomes as a group than non-asthmatics. However, this wasn’t because having asthma is the great health bonus it was flagged as, but because patients with asthma get higher-priority care, and asthma patients are also on high alert to go to their doctor as soon as they start to have pulmonary symptoms.

“If all you measure is patient mortality, the asthmatics look like they come out ahead,” he says. “But if you measure things like cost, or days in hospital, or comorbidities, you would notice that they may have better mortality, but there’s a lot more going on. They’re survivors, but they’re high-risk survivors, and that becomes clear when you start expanding the scope of what your model is predicting.”
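The asthma result is a textbook case of confounding: a hidden factor (how urgently a patient is treated) is tied both to the feature and to the outcome. The sketch below uses invented counts, not data from the Pittsburgh study, to show how pooling over care levels can make asthma look protective even when asthmatics fare worse at every level of care. The `COHORT` table and the `mortality` helper are hypothetical.

```python
# Invented counts for illustration only: (has_asthma, priority_care) -> (patients, deaths)
COHORT = {
    (True, True): (90, 9),     # most asthmatics receive priority care
    (True, False): (10, 4),
    (False, True): (20, 1),
    (False, False): (80, 24),  # most non-asthmatics receive standard care
}

def mortality(asthma, priority_care=None):
    """Death rate for one group; priority_care=None pools both care levels."""
    patients = deaths = 0
    for (has_asthma, priority), (n, d) in COHORT.items():
        if has_asthma == asthma and (priority_care is None or priority == priority_care):
            patients += n
            deaths += d
    return deaths / patients

# Pooled view: the only thing a mortality-only model sees.
print("pooled:", mortality(True), "vs", mortality(False))  # pooled: 0.13 vs 0.25

# Stratified view: within each care level, asthmatics do worse.
for care in (True, False):
    label = "priority care" if care else "standard care"
    print(label, mortality(True, care), "vs", mortality(False, care))
# priority care 0.1 vs 0.05
# standard care 0.4 vs 0.3
```

Stratifying like this only works if the confounder was recorded, which is one reason Christian argues for widening what a model measures and predicts.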

The Pittsburgh team was using a rule-based model, which enabled them to see this asthma connection and immediately flag it. They were able to share with the doctors participating in the project that the model had learned a possibly bogus correlation. But if it had simply been a giant neural network, they might not have known that this problematic association had been learned.
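What makes a rule-based model auditable is that its rules can be read, and even checked mechanically, by people who know the domain. The sketch below is a hypothetical illustration, not the Pittsburgh team's actual rules or tooling: `Rule`, `LEARNED_RULES`, `KNOWN_RISK_FACTORS`, and `suspicious_rules` are all invented names, and the list of known risk factors stands in for clinicians' judgment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    condition: str    # the patient attribute the rule tests
    risk_change: int  # +1 if the rule raises predicted risk, -1 if it lowers it

# Hypothetical learned rule list; NOT the rules of the actual Pittsburgh model.
LEARNED_RULES = [
    Rule("age > 65", +1),
    Rule("has_asthma", -1),
    Rule("high_fever", +1),
]

# Assumed reviewer knowledge: conditions that should never lower pneumonia risk.
KNOWN_RISK_FACTORS = {"age > 65", "has_asthma"}

def suspicious_rules(rules, risk_factors):
    """Return every rule that lowers predicted risk for a known risk factor."""
    return [r for r in rules if r.condition in risk_factors and r.risk_change < 0]

for rule in suspicious_rules(LEARNED_RULES, KNOWN_RISK_FACTORS):
    print(f"Review: IF {rule.condition} THEN lower risk")
# Review: IF has_asthma THEN lower risk
```

A large neural network offers no equivalent list to scan, which is the contrast Christian draws.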

One of the researchers on that project in the 1990s, Rich Caruana of Microsoft, went back 20 years later with a modern set of tools, examined the neural network he had helped develop, and found a number of equally terrifying associations, such as that being over 100 was good for you, or that having high blood pressure was a benefit. All for the same reason: those patients were given higher-priority care.

“Looking back, Caruana says thank God we didn’t use this neural net on patients,” Christian says. “That was the fear he had at the time, and it turns out, 20 years later, to have been fully justified. That all speaks to the importance of having transparent models.”

Algorithms that aren’t transparent, or that are biased, have resulted in a variety of horror stories, which have led some to say these systems have no place in health care, but that’s a bridge too far, Christian says. There’s a large body of evidence showing that, when done properly, these models are an enormous asset, often better than individual expert judgment, as well as providing a host of other advantages.

“On the other hand,” explains Christian, “some are overly enthusiastic about embracing the technology, who say, let’s take our hands off the wheel, let the algorithms do it, let our computer overlords tell us what to do and let the system run on autopilot. And I think that is also going too far, because of the many examples we’ve talked about. As I say, we need to thread that needle.”

In other words, AI can’t be used blindly. It requires a data-driven approach to building provably optimal, transparent models, developed iteratively by an interdisciplinary team of computer scientists, clinicians, patient advocates, and social scientists who are committed to an inclusive process.

That also includes audits once these systems go into production, since certain correlations may break over time, certain assumptions may no longer hold, and we may learn more. The last thing you want to do is just flip the switch and come back 10 years later.
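One simple form such an audit can take is to keep comparing a deployed model's predictions with observed outcomes and to raise a flag when performance slips below what was measured before launch. The sketch below is a hypothetical illustration, not a procedure Christian describes; the `audit` function, the 0.05 tolerance, and the quarterly data are all assumptions, and a real audit would also track subgroups and input distributions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AuditResult:
    window: str
    accuracy: float
    flagged: bool

def audit(baseline_accuracy, windows, tolerance=0.05):
    """Compare each production window with the accuracy measured before launch.

    `windows` is a list of (name, predictions, observed_outcomes) tuples.
    A window is flagged when its accuracy falls more than `tolerance` below baseline.
    """
    results = []
    for name, predictions, outcomes in windows:
        correct = sum(p == o for p, o in zip(predictions, outcomes))
        accuracy = correct / len(outcomes)
        results.append(AuditResult(name, accuracy, accuracy < baseline_accuracy - tolerance))
    return results

# Hypothetical quarterly data: 1 = adverse outcome predicted/observed, 0 = not.
predictions = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
WINDOWS = [
    ("Q1", predictions, [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]),  # 9 of 10 correct
    ("Q2", predictions, [1, 1, 0, 1, 0, 0, 1, 0, 1, 1]),  # 7 of 10 correct
]

results = audit(baseline_accuracy=0.9, windows=WINDOWS)
for result in results:
    status = "REVIEW" if result.flagged else "ok"
    print(result.window, result.accuracy, status)
# Q1 0.9 ok
# Q2 0.7 REVIEW
```

A flag here is a prompt for people to investigate, not an automatic fix, which keeps humans in the loop rather than leaving the system on autopilot.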

“For me, a diverse group of stakeholders with different expertise, representing different interests, coming together at the table to do this in a thoughtful, careful way, is the way forward,” he says. “That’s what I feel most optimistic about in health care.”

Hear more from Brian Christian during our VB Live event, “In Pursuit of Parity: A guide to the responsible use of AI in health care” on March 31.


Presented by Optum

You’ll learn:

- What it means to use advanced analytics “responsibly”

- Why responsible use is so important in health care as opposed to other fields

- The steps that researchers and organizations are taking today to ensure AI is used responsibly

- What the AI-enabled health system of the future looks like and its advantages for consumers, organizations, and clinicians

Speakers:

- Brian Christian, Author, The Alignment Problem, Algorithms to Live By and The Most Human Human

- Sanji Fernando, SVP of Artificial Intelligence & Analytics Platforms, Optum

- Kyle Wiggers, AI Staff Writer, VentureBeat (moderator)