Why teaching robots to play hide-and-seek could be the key to next-gen A.I.

Artificial general intelligence, the idea of an intelligent A.I. agent that’s able to understand and learn any intellectual task that humans can carry out, has long been a component of science fiction. As A.I. gets smarter and smarter (especially with breakthroughs in machine learning tools that are able to rewrite their code to learn from new experiences), it’s increasingly a part of real artificial intelligence conversations as well.

But how will we measure AGI when it does arrive? Over the years, researchers have laid out a number of possibilities. The most famous remains the Turing Test, in which a human judge interacts, sight unseen, with both humans and a machine, and must try to guess which is which. Two others, Ben Goertzel’s Robot College Student Test and Nils J. Nilsson’s Employment Test, seek to test an A.I.’s abilities practically by seeing whether it could earn a college degree or carry out workplace jobs. Another, which I would personally love to discount, posits that intelligence could be measured by the ability to successfully assemble Ikea-style flatpack furniture without problems.

One of the most interesting AGI measures was put forward by Apple co-founder Steve Wozniak. Woz, as he’s known to friends and admirers, suggests the Coffee Test. A general intelligence, he said, would mean a robot that’s able to enter any home in the world, find the kitchen, brew up a fresh cup of coffee, and then pour it into a mug.

As with every A.I. intelligence test, you can argue about how broad or narrow the parameters are. However, the idea that intelligence should be linked to an ability to navigate through the real world is intriguing. It’s also one that a new research project seeks to test out.

Building worlds

“In the last few years, the A.I. community has made huge strides in training A.I. agents to carry out complex tasks,” Luca Weihs, a research scientist at the Allen Institute for AI, an artificial intelligence lab founded by the late Microsoft co-founder Paul Allen, told Digital Trends.

Weihs cited DeepMind’s development of A.I. agents that are able to learn to play classic Atari games and beat human players at Go. However, Weihs noted that these tasks are often detached from our world. Show a picture of the real world to an A.I. trained to play Atari games, and it will have no idea what it’s looking at. It’s here that the Allen Institute researchers believe they have something to offer.

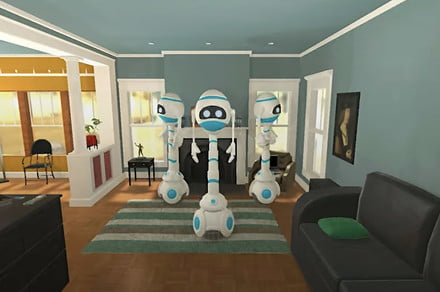

The Allen Institute for A.I. has built up something of a real estate empire. But this isn’t physical real estate so much as virtual real estate. It has developed hundreds of virtual rooms and apartments (including kitchens, bedrooms, bathrooms, and living rooms) in which A.I. agents can interact with thousands of objects. These spaces boast realistic physics, support for multiple agents, and even states like hot and cold. By letting A.I. agents play in these environments, the idea is that they can build up a more realistic perception of the world.

“In [our new] work, we wanted to understand how A.I. agents could learn about a realistic environment by playing an interactive game within it,” Weihs said. “To answer this question, we trained two agents to play Cache, a variant of hide-and-seek, using adversarial reinforcement learning within the high-fidelity AI2-THOR environment. Through this gameplay, we found that our agents learned to represent individual images, approaching the performance of methods requiring millions of hand-labeled images, and even began to develop some cognitive primitives often studied by [developmental] psychologists.”

Rules of the game

Unlike regular hide-and-seek, in Cache, the bots take turns hiding objects such as toilet plungers, loaves of bread, tomatoes, and more, each of which boasts its own individual geometry. The two agents, one a hider and the other a seeker, then compete to see if one can successfully hide the object from the other. This involves a number of challenges, including exploration and mapping, understanding perspective, hiding, object manipulation, and seeking. Everything is accurately simulated, even down to the requirement that the hider must be able to manipulate the object in its hand and not drop it.

Using deep reinforcement learning, a machine learning paradigm based on learning to take actions in an environment to maximize reward, the bots get better and better at hiding the objects, as well as seeking them out.
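To make the idea of learning actions that maximize reward concrete, here is a minimal toy sketch. It is not the Cache setup or AI2-THOR, and it swaps deep reinforcement learning for the much simpler tabular Q-learning. The five-cell corridor, the reward of 1 at the far end, and all names and parameter values below are assumptions made purely for illustration.

```python
import random

N_STATES = 5          # hypothetical corridor: cells 0..4, reward sits in cell 4
ACTIONS = (-1, +1)    # action index 0 = step left, index 1 = step right


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: estimate the value of each action in each cell."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # step cap so an episode always ends
            # Explore at random some of the time, and whenever the two
            # estimates are tied; otherwise take the better-looking action.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # Moving past either end of the corridor leaves the agent in place.
            next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
            done = next_state == N_STATES - 1
            reward = 1.0 if done else 0.0
            # Target = immediate reward plus discounted best future estimate.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best action index for every non-terminal cell."""
    return [0 if left > right else 1 for left, right in q[:-1]]


q_table = train()
policy = greedy_policy(q_table)
print(policy)
```

After training, the greedy policy should choose "step right" in every cell, because that is the only route to the reward. The agents in Cache face the same kind of objective, but with images as input, far richer actions, and an opponent whose behavior keeps changing.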

“What makes this so difficult for A.I.s is that they don’t see the world the way we do,” Weihs said. “Billions of years of evolution has made it so that, even as infants, our brains efficiently translate photons into concepts. On the other hand, an A.I. starts from scratch and sees its world as a huge grid of numbers which it then must learn to decode into meaning. Moreover, unlike in chess, where the world is neatly contained in 64 squares, every image seen by the agent only captures a small slice of the environment, and so it must integrate its observations through time to form a coherent understanding of the world.”

To be clear, this latest work isn’t about building a super-intelligent A.I. In movies like Terminator 2: Judgment Day, the Skynet supercomputer achieves self-awareness at precisely 2:14 a.m. Eastern Time on August 29, 1997. Despite that date, now almost a quarter century in our collective rearview mirror, it seems unlikely that there will be such a precise tipping point when regular A.I. becomes AGI. Instead, more and more computational fruits, low-hanging and high-hanging, will be plucked until we finally have something approaching a generalized intelligence across multiple domains.

Hard stuff is easy, easy stuff is hard

Researchers have historically gravitated toward complex problems for A.I. to solve, based on the idea that if the tough problems can be sorted, the easy ones shouldn’t be too far behind. If you can simulate the decision-making of an adult, can ideas like object permanence (the idea that objects still exist when we can’t see them), which a child learns within the first few months of its life, really prove that difficult? The answer is yes. This paradox, that when it comes to A.I. the hard stuff is often easy and the easy stuff is hard, is what work such as this sets out to address.

“The most common paradigm for training A.I. agents [involves] huge, manually labeled datasets narrowly focused to a single task, for example, recognizing objects,” said Weihs. “While this approach has had great success, I think it’s optimistic to believe that we can manually create enough datasets to produce an A.I. agent that can act intelligently in the real world, communicate with humans, and solve all kinds of problems that it hasn’t encountered before. To do this, I believe we will need to let agents learn the fundamental cognitive primitives we take for granted by allowing them to freely interact with their world. Our work shows that using gameplay to encourage A.I. agents to interact with and explore their world results in them starting to learn these primitives, and thereby shows that gameplay is a promising direction away from manually labeled datasets and towards experiential learning.”

A paper describing this work will be presented at the upcoming 2021 International Conference on Learning Representations.
