Cerebras launches new AI supercomputing processor with 2.6 trillion transistors

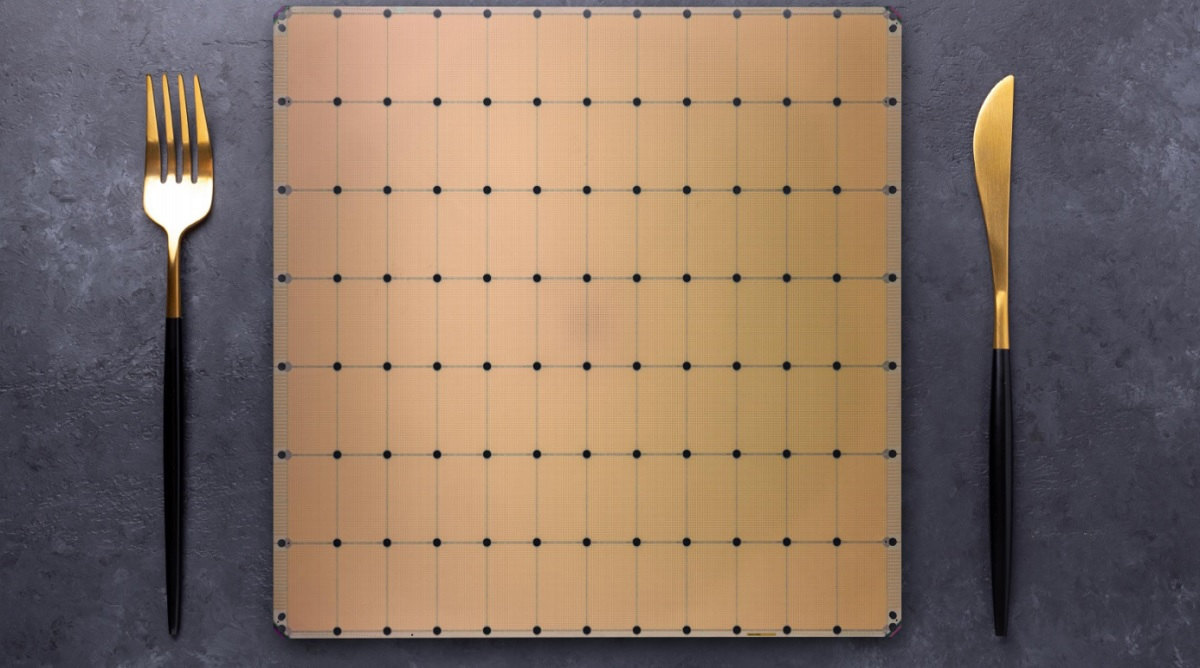

Cerebras Systems has unveiled its new Wafer Scale Engine 2 processor with a record-setting 2.6 trillion transistors and 850,000 AI-optimized cores. It’s built for supercomputing tasks, and it’s the second time since 2019 that Los Altos, California-based Cerebras has unveiled a chip that is basically an entire wafer.

Chipmakers normally slice a wafer from a 12-inch-diameter ingot of silicon to process in a chip factory. Once processed, the wafer is sliced into hundreds of separate chips that can be used in electronic hardware.

But Cerebras, started by SeaMicro founder Andrew Feldman, takes that wafer and makes a single, massive chip out of it. Each piece of the chip, dubbed a core, is interconnected in a sophisticated way to other cores. The interconnections are designed to keep all the cores functioning at high speeds so the transistors can work together as one.

Twice as good as the CS-1

Above: Comparing the CS-1 to the biggest GPU.

Image Credit: Cerebras

In 2019, Cerebras could fit 400,000 cores and 1.2 trillion transistors on its first wafer chip, the WSE, which powers the CS-1 system. It was built with a 16-nanometer manufacturing process. But the new chip is built with a high-end 7-nanometer process, meaning the width between circuits is seven billionths of a meter. With such miniaturization, Cerebras can cram a lot more transistors into the same 12-inch wafer, Feldman said. It cuts that circular wafer into a square that is eight inches by eight inches, and ships the device in that form.

“We have 123 times more cores and 1,000 times more memory on chip and 12,000 times more memory bandwidth and 45,000 times more fabric bandwidth,” Feldman said in an interview with VentureBeat. “We were aggressive on scaling geometry, and we made a set of microarchitecture improvements.”

Now Cerebras’ WSE-2 chip has more than twice as many cores and transistors. By comparison, the largest graphics processing unit (GPU) has only 54 billion transistors, 2.55 trillion fewer than the WSE-2. The WSE-2 also has 123 times more cores and 1,000 times more high-performance on-chip memory than GPU rivals. Many of the Cerebras cores are redundant in case one part fails.

“This is a great achievement, especially when considering that the world’s third-largest chip is 2.55 trillion transistors smaller than the WSE-2,” said Linley Gwennap, principal analyst at The Linley Group, in a statement.

Feldman half-joked that this should prove that Cerebras is not a one-trick pony.

“What this avoids is all the complexity of trying to tie together lots of little things,” Feldman said. “When you have to build a cluster of GPUs, you have to spread your model across multiple nodes. You have to deal with device memory sizes and memory bandwidth constraints and communication and synchronization overheads.”

The CS-2’s specs

Above: TSMC put the CS-1 in a chip museum.

Image Credit: Cerebras

The WSE-2 will power the Cerebras CS-2, the industry’s fastest AI computer, designed and optimized for 7 nanometers and beyond. Manufactured by contract manufacturer TSMC, the WSE-2 more than doubles all performance characteristics on the chip (the transistor count, core count, memory, memory bandwidth, and fabric bandwidth) over the first-generation WSE. The result is that on every performance metric, the WSE-2 is orders of magnitude larger and more performant than any competing GPU on the market, Feldman said.

TSMC put the first WSE-1 chip in a museum of innovation for chip technology in Taiwan.

“Cerebras does deliver the cores promised,” said Patrick Moorhead, an analyst at Moor Insights & Strategy. “What the company is delivering is more along the lines of multiple clusters on a chip. It does appear to give Nvidia a run for its money but doesn’t run raw CUDA. That has become somewhat of a de facto standard. Nvidia solutions are more flexible as well, as they can fit into nearly any server chassis.”

With everything optimized for AI work, the CS-2 delivers more compute performance in less space and with less power than any other system, Feldman said. Depending on the workload, from AI to high-performance computing, the CS-2 delivers hundreds or thousands of times more performance than legacy alternatives, and it does so at a fraction of the power draw and space.

A single CS-2 replaces clusters of hundreds or thousands of graphics processing units (GPUs) that consume dozens of racks, use hundreds of kilowatts of power, and take months to configure and program. At only 26 inches tall, the CS-2 fits in one-third of a standard datacenter rack.

“Obviously, there are companies and entities interested in Cerebras’ wafer-scale solution for large data sets,” said Jim McGregor, principal analyst at Tirias Research, in an email. “But there are many more opportunities at the enterprise level for the millions of other AI applications and still opportunities beyond what Cerebras could handle, which is why Nvidia has the SuperPOD and Selene supercomputers.”

He added, “You also have to remember that Nvidia is targeting everything from AI robotics with Jetson to supercomputers. Cerebras is more of a niche platform. It will take some opportunities but will not match the breadth of what Nvidia is targeting. Besides, Nvidia is selling everything they can build.”

Lots of customers

Above: Comparing the new Cerebras chip to its rival, the Nvidia A100.

Image Credit: Cerebras

And the company has proven itself by shipping the first generation to customers. Over the past year, customers have deployed the Cerebras WSE and CS-1, including Argonne National Laboratory; Lawrence Livermore National Laboratory; Pittsburgh Supercomputing Center (PSC) for its Neocortex AI supercomputer; EPCC, the supercomputing center at the University of Edinburgh; pharmaceutical leader GlaxoSmithKline; Tokyo Electron Devices; and more. Customers praising the chip include those at GlaxoSmithKline and Argonne National Laboratory.

Kim Branson, senior vice president at GlaxoSmithKline, said in a statement that the company has increased the complexity of the encoder models it generates while reducing training time by 80 times. At Argonne, the chip is being used for cancer research and has reduced the experiment turnaround time on cancer models by more than 300 times.

“For drug discovery, we have other wins that we’ll be announcing over the next year in heavy manufacturing and pharma and biotech and military,” Feldman said.

The new chips will ship in the third quarter. Feldman said the company now has more than 300 engineers, with offices in Silicon Valley, Toronto, San Diego, and Tokyo.